When it comes to detecting fraud, context is everything. But how do you evaluate the behavior of a user you know almost nothing about?

This dilemma has become a key pain point for many businesses struggling with new account fraud — a rapidly growing tactic where fraudsters masquerade as legitimate users or use a combination of real and synthetic identity data in order to create accounts for malicious purposes.

Because the identity data they provide is fake — and because businesses have little data about the user’s behavioral patterns that they can leverage to spot anomalies — new account fraud can be difficult to detect. However, with sophisticated machine-learning techniques, enterprises can gain protection from this rising threat.

This blog post will delve into the difficulties of detecting new account fraud, the machine-learning techniques that Transmit Security uses to prevent fraudsters from opening accounts and how we develop, analyze and monitor our models to ensure their efficacy.

By analyzing the behavior of users during the registration process in our customers’ environments, we are able to determine which data is most relevant in distinguishing between legitimate and malicious registrations.

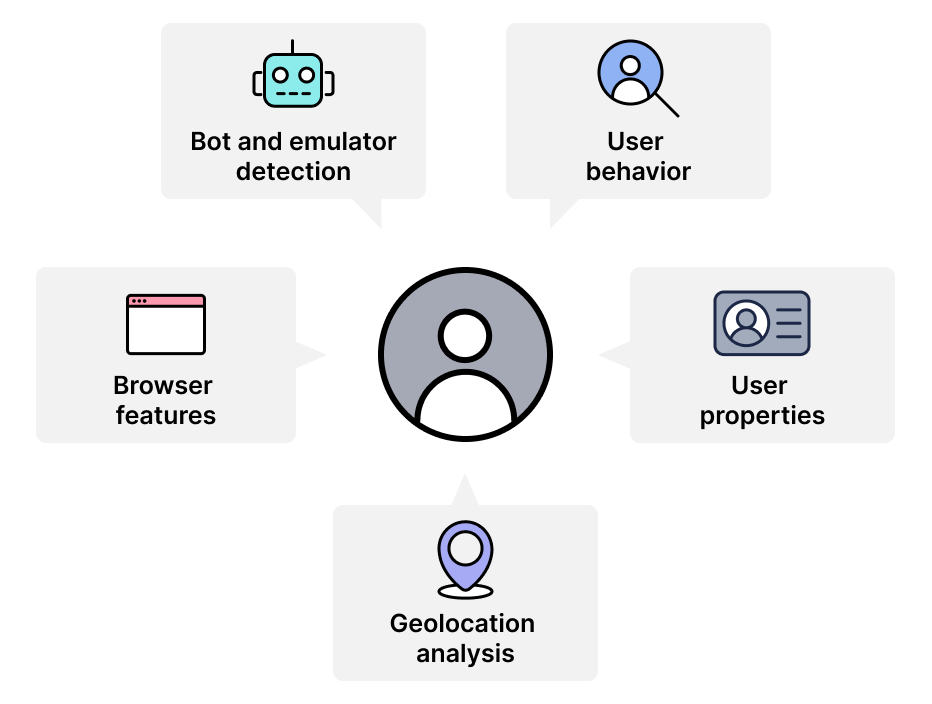

User behavior

Whether new account fraud is carried out by humans, bots or human-assisted bots, the perpetrators’ behavior will deviate from the average behavior of legitimate customers during registration. Legitimate users don’t need to double-check personal details like their name, email or phone number, but fraudsters entering unfamiliar details might make excessive typing errors, type slowly or inconsistently, or copy and paste information into registration forms.

Conversely, fraudsters attacking at scale may be highly familiar with a given application’s registration form, resulting in significantly faster navigation than typical users, who are more likely to pause and read the information on different forms or fields before filling them out.

To pinpoint anomalies that indicate new account fraud, we analyze a wide variety of data points during user registration, including:

- typing speed and consistency

- time spent on each field

- mouse movements

- typing acceleration

- errors and deletions while typing

- form familiarity

- input methods (typing vs. copy/paste)

- and many more
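As a rough illustration of how a few of these signals could be derived, the sketch below computes typing rhythm, deletion ratio and paste usage from a list of timestamped input events. The event format, the function name `typing_features` and the feature names are assumptions made for this example and do not describe Transmit Security’s actual data collection or feature set.

```python
from statistics import mean, pstdev


def typing_features(events):
    """Summarize one registration session.

    `events` is a hypothetical list of (timestamp_seconds, kind) tuples,
    where kind is "key", "delete" or "paste".
    """
    # Timestamps of keystrokes, including deletions, in the order received.
    key_times = [t for t, kind in events if kind in ("key", "delete")]
    # Gaps between consecutive keystrokes approximate typing rhythm.
    gaps = [later - earlier for earlier, later in zip(key_times, key_times[1:])]
    n_keys = sum(1 for _, kind in events if kind == "key")
    n_deletes = sum(1 for _, kind in events if kind == "delete")
    return {
        "mean_gap": mean(gaps) if gaps else 0.0,      # lower means faster typing
        "gap_stdev": pstdev(gaps) if gaps else 0.0,   # higher means less consistent
        "delete_ratio": n_deletes / max(n_keys + n_deletes, 1),
        "used_paste": any(kind == "paste" for _, kind in events),
    }


# Made-up session: steady typing, a pause before a correction, then a paste.
session = [
    (0.0, "key"), (0.2, "key"), (0.4, "key"),
    (1.4, "delete"), (1.6, "key"), (2.0, "paste"),
]
features = typing_features(session)
print(features)
```

In practice, features like these would be compared against the typical values seen for an application’s registration form rather than judged in isolation.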

User property distribution

Just as sudden changes in an individual user’s properties, such as their device and OS, can indicate an account takeover (ATO), a shift in the overall distribution of properties across an application’s users could signal that the application is being targeted by a large-scale fraud campaign.

For example, a surge in registrations from a specific device type could indicate that a fraudster is using bots or automation to scale registration fraud from a limited number of devices — especially if those device types represent only a small fraction of the application’s typical user base.
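One simple way to express this idea is to compare each device type’s share of recent registrations with its share in a historical baseline. The sketch below is a minimal illustration under assumed inputs: the function name `surging_device_types`, the `min_lift` and `min_count` parameters and the sample counts are all hypothetical, and a production model would likely combine this signal with many others.

```python
from collections import Counter


def surging_device_types(baseline, recent, min_lift=3.0, min_count=20):
    """Return device types whose share of recent registrations is at least
    `min_lift` times their baseline share (thresholds are illustrative)."""
    base_total = sum(baseline.values())
    recent_total = sum(recent.values())
    n_types = len(set(baseline) | set(recent))
    flagged = {}
    for device, count in recent.items():
        if count < min_count:
            continue  # too few registrations to draw a conclusion
        # Add-one smoothing so device types unseen in the baseline do not
        # cause a division by zero.
        base_share = (baseline.get(device, 0) + 1) / (base_total + n_types)
        recent_share = count / recent_total
        lift = recent_share / base_share
        if lift >= min_lift:
            flagged[device] = round(lift, 2)
    return flagged


# Made-up counts: "Galaxy Tab" is about 1% of the baseline but 50% of recent traffic.
baseline = Counter({"iPhone": 500, "Pixel": 300, "Windows PC": 190, "Galaxy Tab": 10})
recent = Counter({"iPhone": 50, "Pixel": 30, "Windows PC": 20, "Galaxy Tab": 100})
flagged = surging_device_types(baseline, recent)
print(flagged)
```

The `min_count` guard reflects a design choice: a rare device type appearing two or three times is noise, while the same lift over dozens of registrations is worth investigating.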

To detect anomalous properties that elevate risk or may indicate a coordinated attack, Transmit Security builds profiles of the properties of our customers’ application users, including device types, OSes, device attributes, city and country, among many others. Our machine-learning models leverage these profiles to calculate risk during account registration.

Geolocation analysis

Just as an unusually high number of new account registrations from a specific device type can be an indicator of fraud, a sudden and unexplained increase in registrations from a specific location can indicate that those registrations are coming from a single fraudster or a group of fraudsters working together.

To help detect these scenarios, we analyze how the current geographic distribution of new account registrations compares to the usual distribution and develop features that help spot anomalies in user bases. This process of transforming raw data into features that can be used to create a predictive model is known as feature engineering, as we explain in this blog on detecting user anomalies.
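A distribution comparison of this kind can be turned into a single numeric feature. The sketch below uses the Jensen-Shannon divergence, which is one common choice and not necessarily the measure used in our models; the function names, country codes and counts are assumptions made for illustration.

```python
import math


def _shares(counts, keys):
    """Convert raw counts into a probability for each key."""
    total = sum(counts.values())
    return [counts.get(key, 0) / total for key in keys]


def js_divergence(baseline, recent):
    """Jensen-Shannon divergence (log base 2) between two count dictionaries.

    Returns 0.0 for identical distributions and 1.0 for distributions
    with no locations in common.
    """
    keys = sorted(set(baseline) | set(recent))
    p = _shares(baseline, keys)
    q = _shares(recent, keys)
    m = [(a + b) / 2 for a, b in zip(p, q)]  # midpoint distribution

    def kl(x, y):
        # Kullback-Leibler divergence; terms with zero probability contribute 0.
        return sum(a * math.log2(a / b) for a, b in zip(x, y) if a > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


# Made-up registration counts per country; "XX" stands in for a country
# that is absent from the baseline.
baseline = {"US": 700, "CA": 200, "GB": 100}
normal_day = {"US": 68, "CA": 22, "GB": 10}
attack_day = {"US": 70, "CA": 20, "GB": 10, "XX": 150}

print(js_divergence(baseline, normal_day))
print(js_divergence(baseline, attack_day))
```

The resulting score could then be supplied to a model as one engineered feature alongside per-location counts.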

Bot and emulator features

Signs of bot activity during account opening are a strong indicator of attempted fraud, and sophisticated, human-like bots increasingly require AI-based detection in order to distinguish them from legitimate users. Our machine-learning models are fed with information gathered from a range of signals including low session duration, concentrated mouse clicks, fast typing speeds, and others outlined in our bot detection brief.

The use of mobile emulators during account opening is another key indicator of fraud, as fraudsters can use them to scale attacks by creating a large number of new fraudulent accounts that appear to be opened by users on different, clean devices. Our account opening models detect emulator usage by analyzing device build information, SIM and carrier reputation, and behavioral signals.

Browser features

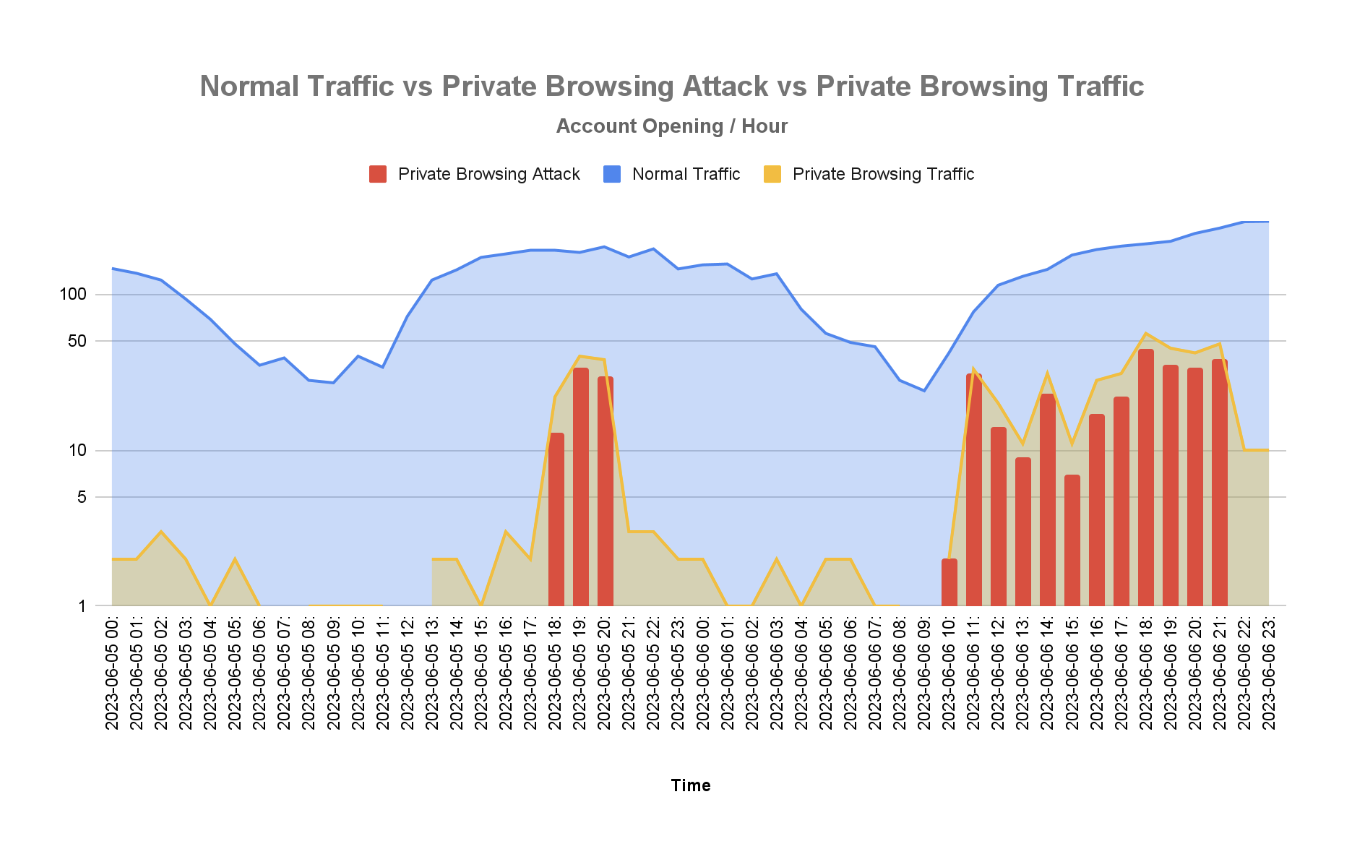

Taken on its own, the use of a private browser is not an indicator of fraud, as legitimate users often use private browsers to avoid being tracked and opt out of targeted ads. However, private browsing may elevate the risk level because it randomizes the browser attributes used to create device fingerprints. This enables fraudsters to evade rules that prevent high-velocity registration from a single device, as well as detection that leverages global intelligence on known malicious devices. As a result, private browsing detection in individual users and trends in overall usage of private browsers can be used in conjunction with other risk signals as a factor in detecting suspicious behavior.

Developing new account fraud models

Ultimately, machine-learning models are only as good as the data they train on. As a result, data that is collected for training our algorithms must first be preprocessed to deliver the best possible detection model.

For example, if the data collector fails to retrieve most of the raw data on a device in a small percentage of cases, training the algorithm on those cases could weaken the model’s ability to predict whether an action taken during registration is fraudulent or not.

After filtering out the data that is not optimal for training, we develop our algorithms based on the data we retained.
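The filtering step described above might look something like the following sketch, which drops records where the collector retrieved too little of the expected raw data. The field names, the `min_coverage` parameter and the 50% cutoff are hypothetical choices for this example, not the criteria we apply in production.

```python
# Hypothetical raw fields that the data collector is expected to return.
REQUIRED_FIELDS = ["device_model", "os_version", "screen_size", "timezone"]


def filter_training_records(records, required_fields, min_coverage=0.5):
    """Keep only records where at least `min_coverage` of the required
    fields were actually collected (that is, are not None)."""
    kept = []
    for record in records:
        present = sum(1 for field in required_fields if record.get(field) is not None)
        if present / len(required_fields) >= min_coverage:
            kept.append(record)
    return kept


# Made-up records: record 2 is missing most of its device data.
records = [
    {"id": 1, "device_model": "A", "os_version": "17", "screen_size": "390x844", "timezone": "UTC"},
    {"id": 2, "device_model": None, "os_version": None, "screen_size": None, "timezone": "UTC"},
    {"id": 3, "device_model": "B", "os_version": "14", "screen_size": None, "timezone": None},
]
training_set = filter_training_records(records, REQUIRED_FIELDS)
print([record["id"] for record in training_set])
```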

Analyzing machine-learning models

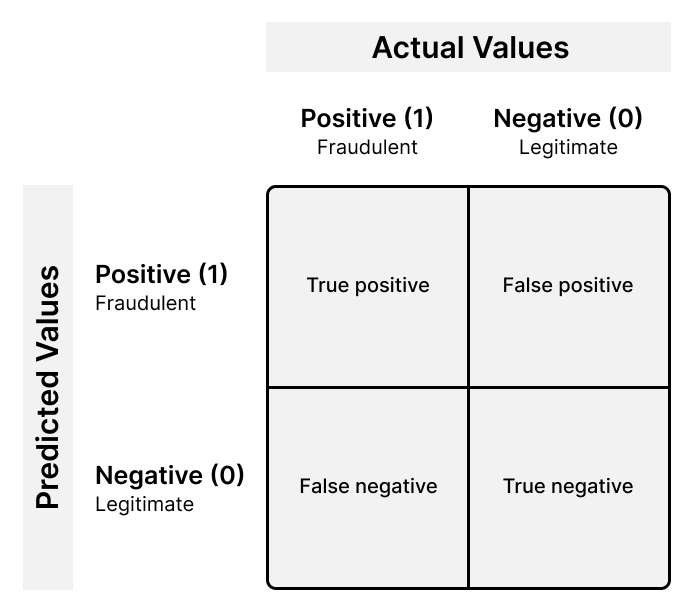

We measure our algorithms using both human analysts who monitor the model’s performance and annotated data that has been collected. Depending on the amount of labeled data we have available for the tenant, the algorithms we use may be supervised, meaning that they are trained on labeled data, or unsupervised, meaning that the algorithm learns from unlabeled datasets. With supervised learning, labeled data from the customer or from security research review is used to compare actual true and false positives and negatives against those predicted by the algorithm. One tool for this comparison is a confusion matrix (shown below).

From there, we can calculate different metrics, such as the model’s overall accuracy and how often its positive predictions are correct.
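To make the calculation concrete, the sketch below counts the four cells of a confusion matrix from labeled outcomes and derives accuracy, precision, recall and F1 from them. The labels and predictions are made-up sample data, and the function names are chosen for this example only.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives (1 = fraud, 0 = legitimate)."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for actual, predicted in zip(y_true, y_pred):
        if predicted and actual:
            counts["tp"] += 1   # fraud correctly flagged
        elif predicted and not actual:
            counts["fp"] += 1   # legitimate user wrongly flagged
        elif actual:
            counts["fn"] += 1   # fraud that was missed
        else:
            counts["tn"] += 1   # legitimate user correctly passed
    return counts


def metrics(c):
    """Derive standard metrics from confusion-matrix counts."""
    total = c["tp"] + c["fp"] + c["tn"] + c["fn"]
    flagged = c["tp"] + c["fp"]
    actual_pos = c["tp"] + c["fn"]
    precision = c["tp"] / flagged if flagged else 0.0
    recall = c["tp"] / actual_pos if actual_pos else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (c["tp"] + c["tn"]) / total if total else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up labels and model predictions for eight registrations.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
counts = confusion_counts(y_true, y_pred)
results = metrics(counts)
print(counts)
print(results)
```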

Unsupervised machine learning, however, lacks labels and requires a different approach. Instead, we use different metrics to determine the performance of the model, measuring, for example, whether a request that was predicted to be malicious contains especially unusual properties or properties that align with known malicious requests.

Once we have gathered insights on the model’s performance, we can use them to optimize it based on the specific metrics required by the client, such as false positive rate or the maximum alerts provided per day.

For example, one customer might want to catch as many events as possible, and thus would like to optimize its recall (also known as true positive rate) for a 0.5% daily maximum. Another might want to aim for a precision (which shows how often the model is correct when predicting a targeted class) of at least 90% daily, and a third may prefer an F1 score, the harmonic mean of recall and precision, of at least 90% daily. Based on the client’s requested metrics, we configure the correct threshold per user. A more detailed explanation of precision, F1 and recall is provided in this post from Towards Data Science.
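As one possible illustration of threshold selection, the sketch below scans candidate thresholds over a set of risk scores and keeps the one with the highest recall that still meets a required precision. The scores, labels and the `pick_threshold` helper are assumptions made for this example; the actual tuning procedure may differ.

```python
def pick_threshold(scores, labels, min_precision=0.9):
    """Return (threshold, precision, recall) for the threshold with the
    highest recall whose precision is at least `min_precision`,
    or None if no threshold qualifies.

    A request is flagged when its score is >= the threshold.
    """
    total_pos = sum(labels)
    best = None
    for threshold in sorted(set(scores)):
        # Labels of all requests flagged at this threshold (never empty,
        # because the threshold is itself one of the scores).
        flagged = [label for score, label in zip(scores, labels) if score >= threshold]
        tp = sum(flagged)
        precision = tp / len(flagged)
        recall = tp / total_pos if total_pos else 0.0
        if precision >= min_precision and (best is None or recall > best[2]):
            best = (threshold, precision, recall)
    return best


# Made-up risk scores with ground-truth labels (1 = fraud).
scores = [0.95, 0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.1]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

strict = pick_threshold(scores, labels, min_precision=0.9)
relaxed = pick_threshold(scores, labels, min_precision=0.8)
print(strict)
print(relaxed)
```

On this sample, relaxing the precision requirement lowers the selected threshold and raises recall, which mirrors the trade-off each customer chooses when specifying its preferred metric.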

For all models, we maintain full privacy of the customer’s data, which does not leave our secured environment at any stage of development or delivery.

Monitoring machine-learning models

Once a model has been tuned and deployed, we check it daily in production, using intelligence from our security analysis team to assess how well the algorithm is generalizing to new and emerging threats and to maintain various other metrics for optimal performance.

New updates that tune the model are pushed daily to deliver the most up-to-date protection. However, as user behavior evolves, new data is collected and the customer provides data on false positives and negatives, we may wish to retrain the model to provide better results. In addition, as our Security Research Team works to develop more complex and interesting features that help the model to better detect malicious activity, we may need to retrain the model on the new features to reap the benefits of our evolving detection techniques.

When we believe that a better model can be deployed, we redevelop the model using the same steps outlined above.

Harnessing machine learning to detect new account fraud

As fraudsters continue to leverage new account fraud for a variety of malicious activities — and as synthetic identity fraud and other sophisticated techniques make new account fraud harder to detect — advanced machine-learning models are increasingly necessary to detect fraud during account opening.

By detecting anomalies in user properties for specific applications and in user behavior on registration pages, and by analyzing requests for signs of bot and emulator use, geolocation anomalies and browser features, Transmit Security provides robust machine-learning models for pinpointing fraudulent behavior from the moment users begin interacting with an application.

To find out more about how Transmit Security can protect your account registration process, check out our case study on how a leading U.S. bank uncovered thousands of registration bots and reduced new account fraud by 98% using our Detection and Response Services.