Protecting user accounts from fraud and takeover is a decades-long problem, and it grows more complex as fraudsters build tools and technologies that even novice criminals can use to carry out account and data breaches without writing a single line of code.

VentureBeat recently provided a detailed example of how ChatGPT can generate sample code for an email phishing campaign within seconds — in addition to providing an enticing, grammatically correct email that could be used to launch that campaign.

Even tech giants are vulnerable to these kinds of attacks. In late 2022, a phishing campaign dubbed “Meta-phish” successfully bypassed email security checks and harvested user information that was used to carry out a massive number of account takeover attacks on Facebook and Twitter users.

No company — whether in tech or another industry — can expect end users and customers to avoid such social engineering traps, so leaders across industries must work to solve this problem.

The good news is that with the right technology and expertise, service providers can stay ahead of the curve and effectively hunt down sophisticated attack methods in the wild. Account takeover (ATO) attacks can be detected and mitigated using machine learning, which we consider the most effective approach service providers can take to combat the evolving ATO problem at scale.

Harnessing machine learning to prevent ATO

This blog covers multiple aspects of using machine learning to prevent account takeover: defining the problem, explaining the advantages of machine learning over heuristics and rule-based models, and providing a real-world example that demonstrates its efficacy.

What is account takeover?

Bad actors can use advanced technologies and software vulnerabilities, separately or together, to bypass identity security systems and gain access to user accounts. This access lets attackers carry out a range of malicious activities, such as wiring money or stealing credit card information. These activities, which are usually financially motivated, can cause catastrophic damage to both the business and its customers.

Our strongest tool for solving this problem is the information and context we have about an application’s common usage patterns and about the specific patterns of each of its users. With this information, we can detect anomalies in how a specific user interacts with the service, which most commonly appear as deviations from that user’s known patterns or history.

Finding anomalies in a specific user’s behavior is harder today than ever before. Users’ behavior varies more than it used to, so a robust account takeover detection solution must take each user’s full historical profile into account, considering both their usual identity and their behavior. This is where machine learning (ML) comes into play.

Today, account takeover systems frequently rely on rule-based detection methods, which are:

- Applied to all users as general rules

- Human-generated, requiring expensive and time-consuming maintenance and research

- Prone to high rates of both false positives, which erroneously flag genuine users, and false negatives, which are equally or more damaging because they grant fraudsters access to accounts

Machine learning vs. rule-based detection

So why does an ML-based strategy triumph over other account takeover detection methods? The answer lies in its advantages:

- Robustness – These algorithms can detect complicated connections between different data points and find complex anomalous patterns that humans writing heuristic rules might miss. This makes ML algorithms more robust to changes in data distribution and to new attack vectors.

- Adaptation – ML models can leverage up-to-date data to adapt swiftly to changes in attack vectors and customer traffic, while humans often rely on preconceived notions that may not stay true over time.

- Efficiency – The more traffic a rule-based fraud detection system receives, the more often its rules must be updated, requiring more analysts to research them. In comparison, a machine learning model can analyze additional events without a matching increase in analyst effort.

- Accuracy – The more data is provided to the algorithm, the smarter and more accurate it generally becomes.

- Fewer false detections – Since the algorithm learns behavioral profiles for each user, it is less prone to false detections that can result when global rules are applied to unique, individual users.
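As a hedged illustration of the last point, the Python sketch below contrasts a single global rule with a per-user baseline. The 9:00 to 17:59 window, the `HourProfile` type and the sample user are hypothetical and are not taken from Transmit Security’s engine; the point is only that the same login hour can be normal for one user and anomalous for another.

```python
from dataclasses import dataclass

# Hypothetical global rule: any login outside 9:00-17:59 is suspicious for everyone.
GLOBAL_START_HOUR, GLOBAL_END_HOUR = 9, 17


def global_rule_flags(login_hour: int) -> bool:
    """Flag a login if it falls outside one fixed window shared by all users."""
    return not GLOBAL_START_HOUR <= login_hour <= GLOBAL_END_HOUR


@dataclass
class HourProfile:
    """Hypothetical per-user baseline: the login hours seen in this user's history."""
    usual_hours: frozenset[int]


def per_user_flags(profile: HourProfile, login_hour: int) -> bool:
    """Flag a login only if it falls outside this user's own observed hours."""
    return login_hour not in profile.usual_hours


# A user whose history shows logins from 10 a.m. up to 9 p.m.
evening_user = HourProfile(usual_hours=frozenset(range(10, 21)))

for hour in (11, 20, 3):
    # Prints: hour, global-rule verdict, per-user verdict
    print(hour, global_rule_flags(hour), per_user_flags(evening_user, hour))
# 11 False False
# 20 True False
# 3 True True
```

The 8 p.m. login is a false positive under the global rule but ordinary for this user, while the 3 a.m. login stands out under both approaches.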

Problem type: novelty detection

The type of problem we solve is known in the AI field as novelty detection. Novelty detection is a form of anomaly detection in which the model is trained on data that contains no outliers (for instance, legitimate user behavior data) and then checks whether new data points lie outside this normal set.
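A minimal sketch of novelty detection, assuming a simple per-feature z-score model: it is fitted only on legitimate sessions and then asked whether new points fall outside that learned normal range. The class name, the two features and the threshold of 3 are illustrative assumptions, not the production model; libraries such as scikit-learn offer richer novelty detectors (for example `OneClassSVM`, or `LocalOutlierFactor` with `novelty=True`).

```python
import statistics
from typing import Sequence


class ZScoreNoveltyDetector:
    """Toy novelty detector fitted only on clean, legitimate data."""

    def __init__(self, threshold: float = 3.0) -> None:
        self.threshold = threshold
        self.means: list[float] = []
        self.stdevs: list[float] = []

    def fit(self, normal_rows: Sequence[Sequence[float]]) -> "ZScoreNoveltyDetector":
        # Assumes the training rows contain no outliers (e.g. verified legitimate sessions).
        columns = list(zip(*normal_rows))
        self.means = [statistics.fmean(col) for col in columns]
        # Replace a zero spread with a tiny value so constant features don't divide by zero.
        self.stdevs = [statistics.pstdev(col) or 1e-9 for col in columns]
        return self

    def score(self, row: Sequence[float]) -> float:
        # Largest absolute z-score across features: how far the point sits from "normal".
        return max(abs(x - m) / s for x, m, s in zip(row, self.means, self.stdevs))

    def is_novel(self, row: Sequence[float]) -> bool:
        return self.score(row) > self.threshold


# Hypothetical features per session: [session length in minutes, mean mouse speed in px/ms]
legit_sessions = [[12.0, 1.0], [15.0, 1.1], [10.0, 0.9], [14.0, 1.2], [13.0, 0.8]]
detector = ZScoreNoveltyDetector().fit(legit_sessions)

print(detector.is_novel([13.0, 1.05]))  # False: close to the learned normal range
print(detector.is_novel([13.0, 0.30]))  # True: mouse speed far below anything seen in training
```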

How we represent a user’s behavior and properties

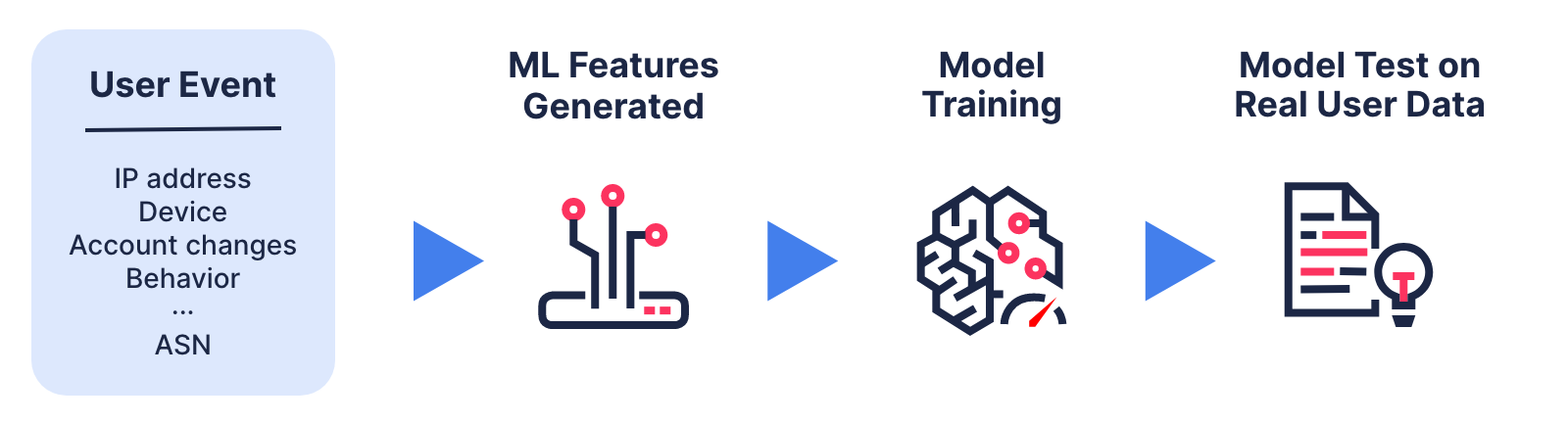

To produce the model, we first feed it the data we want it to “learn”: the user activity data. Through feature engineering, raw data points about the user’s behavior are transformed into features, the measurable inputs the algorithm works with, to optimize the algorithm and produce fairer and more accurate results when evaluating potential account takeover activity.
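To make feature engineering concrete, here is a sketch that turns one raw login event into numeric features. The event fields (`timestamp`, `device_id`, `country`, `vpn`) and the feature names are hypothetical; they show typical transformations rather than Transmit Security’s actual features.

```python
import math
from datetime import datetime


def engineer_features(event: dict, known_devices: set[str], known_countries: set[str]) -> dict[str, float]:
    """Turn one raw login event into numeric features (hypothetical feature set)."""
    ts = datetime.fromisoformat(event["timestamp"])
    hour = ts.hour + ts.minute / 60
    angle = 2 * math.pi * hour / 24
    return {
        # Encode hour-of-day on a circle so 23:30 and 00:30 end up close together.
        "hour_sin": math.sin(angle),
        "hour_cos": math.cos(angle),
        # Binary flags comparing the event with the user's history.
        "new_device": float(event["device_id"] not in known_devices),
        "new_country": float(event["country"] not in known_countries),
        "uses_vpn": float(event.get("vpn", False)),
    }


raw_event = {"timestamp": "2023-01-15T03:20:00", "device_id": "dev-999", "country": "TW", "vpn": False}
features = engineer_features(raw_event, known_devices={"phone-1", "pc-1"}, known_countries={"US", "CA"})
print(features)
```

Encoding the hour as a point on a circle is a common design choice: a raw hour value would put 23:30 and 00:30 almost 24 units apart even though they are only an hour apart in time.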

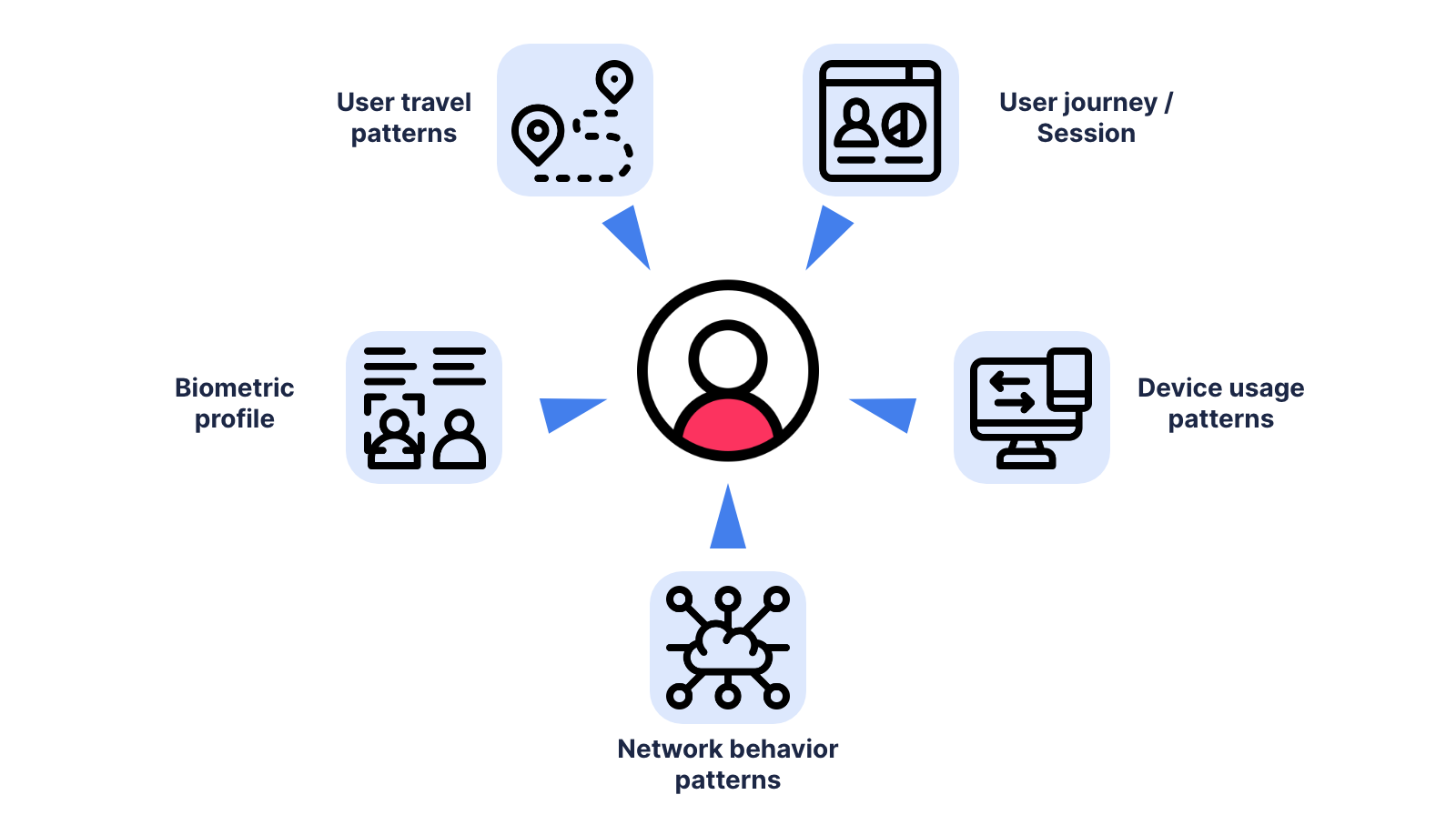

Below are some examples of features we input into the machine learning engine of Transmit Security’s Detection and Response service:

- Activity journey features, including the user’s normal activity hours, client info and its usage frequency (web browser, remote desktop connection, etc.), usage patterns during application interaction and more.

- Geo features, which relate to a user’s location, including their connection location, time zone and the application usage frequency from this location.

- Network features related to the accessing network, such as network reputation, usage of VPN, Tor browsers and hosting ASNs, previous activity patterns from the current IP address and more.

- Device features, which calculate device fingerprints for trusted devices and device reputation in order to detect the use of suspicious tools.

- Behavioral biometric features, which detect and calculate unique user device interaction patterns, such as the speed and angles of user mouse movements or typing speed, compared to previous user activity in the application.
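Behavioral biometric features such as mouse speed and angle can be derived from raw pointer samples. The sketch below assumes the client reports `(t_ms, x, y)` tuples and computes three illustrative summaries; the sampling format and feature names are assumptions, and a real collector would use far more signals.

```python
import math
import statistics


def mouse_features(samples: list[tuple[float, float, float]]) -> dict[str, float]:
    """Summarize a mouse trajectory given as (t_ms, x, y) samples (hypothetical feature set)."""
    speeds, headings = [], []
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            continue  # skip duplicate or out-of-order timestamps
        dx, dy = x1 - x0, y1 - y0
        speeds.append(math.hypot(dx, dy) / dt)  # pixels per millisecond
        headings.append(math.atan2(dy, dx))     # direction of movement in radians
    if not speeds:
        raise ValueError("need at least two samples with increasing timestamps")
    # Turning angle between consecutive segments, wrapped into [-pi, pi].
    turns = [math.atan2(math.sin(b - a), math.cos(b - a)) for a, b in zip(headings, headings[1:])]
    return {
        "mean_speed": statistics.fmean(speeds),
        "speed_stdev": statistics.pstdev(speeds),
        "mean_abs_turn": statistics.fmean([abs(t) for t in turns]) if turns else 0.0,
    }


straight = mouse_features([(0, 0, 0), (10, 10, 0), (20, 20, 0), (30, 30, 0)])
zigzag = mouse_features([(0, 0, 0), (10, 10, 0), (20, 10, 10), (30, 20, 10)])
print(straight)
print(zigzag)
```

Both trajectories move at the same speed, but the zigzag path turns by a quarter circle at every step, so the angle feature separates them where speed alone cannot.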

All these features, and many more, are measured over time to create a profile for each account owner in the application. The Transmit Security risk engine then uses these profiles to determine whether an action performed on a user’s account is legitimate.
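Because profiles are measured over time, one plausible way to maintain them is incrementally, one event at a time. This sketch, assuming hypothetical event fields, keeps frequency counts for categorical attributes and uses Welford’s online algorithm for a running mean and standard deviation of mouse speed; it is not the Transmit Security data model.

```python
import math
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class RunningStat:
    """Welford's online mean/variance, so the profile updates one event at a time."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def stdev(self) -> float:
        # Population standard deviation of everything seen so far.
        return math.sqrt(self.m2 / self.n) if self.n else 0.0


@dataclass
class UserProfile:
    """Hypothetical per-account profile accumulated from the user's events over time."""
    countries: Counter = field(default_factory=Counter)
    devices: Counter = field(default_factory=Counter)
    browsers: Counter = field(default_factory=Counter)
    mouse_speed: RunningStat = field(default_factory=RunningStat)

    def update(self, event: dict) -> None:
        self.countries[event["country"]] += 1
        self.devices[event["device_id"]] += 1
        self.browsers[event["browser"]] += 1
        self.mouse_speed.update(event["mouse_speed"])

    def frequency(self, attribute: str, value: str) -> float:
        """Share of past events with this value, e.g. how often the user came from 'CA'."""
        counts: Counter = getattr(self, attribute)
        total = sum(counts.values())
        return counts[value] / total if total else 0.0


profile = UserProfile()
history = [
    {"country": "US", "device_id": "pc", "browser": "Chrome", "mouse_speed": 1.0},
    {"country": "US", "device_id": "phone", "browser": "Chrome", "mouse_speed": 1.2},
    {"country": "US", "device_id": "pc", "browser": "Chrome", "mouse_speed": 0.8},
    {"country": "CA", "device_id": "phone", "browser": "Chrome", "mouse_speed": 1.0},
]
for event in history:
    profile.update(event)

print(profile.frequency("countries", "CA"))  # 0.25
print(profile.frequency("browsers", "Edge"))  # 0.0
```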

Training the detection model and observing results

The process of optimizing the model for the task at hand is known as “training” the model. Training involves iteratively changing the model’s parameters to maximize a given metric of success: at each step, the model is fed data and its parameters are adjusted, until the model is tuned.
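As a toy illustration of training, the sketch below fits a one-dimensional Gaussian to legitimate mouse-speed values by gradient ascent, iteratively nudging two parameters to maximize a metric (the average log-likelihood). The data, learning rate and step count are assumptions chosen for the example, and real detection models have far more parameters.

```python
import math


def avg_log_likelihood(data: list[float], mu: float, sigma: float) -> float:
    """The 'metric of success' here: mean Gaussian log-likelihood of the normal data."""
    return sum(
        -0.5 * math.log(2 * math.pi) - math.log(sigma) - (x - mu) ** 2 / (2 * sigma**2)
        for x in data
    ) / len(data)


def train_gaussian(data: list[float], lr: float = 0.01, steps: int = 5000) -> tuple[float, float]:
    """Fit a 1-D Gaussian to normal data by gradient ascent on avg_log_likelihood."""
    mu, log_sigma = 0.0, 0.0  # arbitrary starting parameters (sigma = 1)
    n = len(data)
    for _ in range(steps):
        sigma2 = math.exp(2 * log_sigma)
        # Gradients of the average log-likelihood w.r.t. mu and log(sigma).
        grad_mu = sum(x - mu for x in data) / (n * sigma2)
        grad_log_sigma = -1 + sum((x - mu) ** 2 for x in data) / (n * sigma2)
        # Take a small step uphill on the metric.
        mu += lr * grad_mu
        log_sigma += lr * grad_log_sigma
    return mu, math.exp(log_sigma)


mouse_speeds = [0.8, 1.0, 1.1, 0.9, 1.2]  # hypothetical legitimate-session values
before = avg_log_likelihood(mouse_speeds, 0.0, 1.0)
mu, sigma = train_gaussian(mouse_speeds)
after = avg_log_likelihood(mouse_speeds, mu, sigma)
print(f"mu={mu:.3f} sigma={sigma:.3f} log-likelihood {before:.3f} -> {after:.3f}")
```

Optimizing log(sigma) instead of sigma keeps the standard deviation positive without extra constraints. For a Gaussian the optimum also has a closed form (the sample mean and population standard deviation), which makes it easy to confirm that the iterative procedure converged.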

Once training is complete and the model has been optimized on regular user data, we move on to testing the trained algorithm. These tests use relevant attack cases drawn both from known malicious behavior detected in our customers’ environments and from artificially created samples known as synthetic data.
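Testing can be sketched as measuring how many attack samples a trained detector catches and how many held-out legitimate sessions it wrongly flags. Everything below is hypothetical: the one-feature `toy_detector` stands in for a trained model, and `make_synthetic_attacks` is just one simple way to generate synthetic data by perturbing genuine sessions.

```python
import statistics
from typing import Callable

Session = dict[str, float]

# Stand-in for a trained model: a profile fitted on hypothetical training sessions.
TRAIN_SPEEDS = [0.8, 1.0, 1.1, 0.9, 1.2]
MU, SIGMA = statistics.fmean(TRAIN_SPEEDS), statistics.pstdev(TRAIN_SPEEDS)


def toy_detector(session: Session) -> bool:
    return abs(session["mouse_speed"] - MU) / SIGMA > 3.0


def make_synthetic_attacks(legit: list[Session]) -> list[Session]:
    """Hypothetical recipe: replay real sessions with scripted, much slower mouse movement."""
    return [{**s, "mouse_speed": s["mouse_speed"] * 0.4} for s in legit]


def evaluate(is_suspicious: Callable[[Session], bool],
             legit: list[Session], attacks: list[Session]) -> dict[str, float]:
    return {
        # Share of attack samples the detector catches (higher is better).
        "detection_rate": sum(map(is_suspicious, attacks)) / len(attacks),
        # Share of genuine sessions wrongly flagged (lower is better).
        "false_positive_rate": sum(map(is_suspicious, legit)) / len(legit),
    }


# Held-out legitimate sessions (not used for training) and previously observed attacks.
heldout_legit = [{"mouse_speed": v} for v in (0.95, 1.05, 1.15, 0.85)]
known_attacks = [{"mouse_speed": 0.3}, {"mouse_speed": 0.97}]  # the second one evades the toy model

report = evaluate(toy_detector, heldout_legit, known_attacks + make_synthetic_attacks(heldout_legit))
print(report)
```

Mixing known attacks with synthetic ones matters here: the synthetic samples are all caught, while one of the real attacks slips through, which is exactly the kind of gap testing is meant to surface.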

Hunting down bad actors in real life

Now, let’s demonstrate the capabilities of the novelty detection model in a real-life use case.

The case involves a customer of a large US bank who usually connects from a specific location (Connecticut, US) but also connects from several other countries, including Canada. This individual has two known devices: a mobile device and a PC. He has several IP addresses associated with him, usually connects between 10 a.m. and 9 p.m., and rarely uses VPN connections. He also usually uses the Chrome browser and displays mouse movement patterns within a characteristic range.

This behavioral pattern was suddenly disrupted when our system received an event for this user’s account from an IP address in Taiwan, with an unknown device, a new browser type (Edge), and slower, less varied mouse movements than the user’s normal patterns. The connection time differed from the user’s usual login hours, and the device had properties (new languages and fonts) that his previous devices did not. When our model detected this event, it returned a recommendation of “Challenge.” Recommendations such as this can be used to trigger step-up authentication for the user.
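The case study can be replayed against a toy profile to show how per-feature deviations might roll up into a recommendation. The weights, the 0.5 cut-off, the mouse-speed range and the login hour of 3 are assumptions (the source says only that the login time was unusual), and the weighted sum is a stand-in for the model’s actual scoring; only the “Challenge” outcome comes from the case study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    """Per-user baseline mirroring the case study; mouse_speed_range is a made-up number."""
    countries: frozenset = frozenset({"US", "CA"})
    devices: frozenset = frozenset({"mobile", "pc"})
    browsers: frozenset = frozenset({"Chrome"})
    active_hours: range = range(10, 21)  # 10 a.m. up to 9 p.m.
    mouse_speed_range: tuple = (0.8, 1.3)


# Hypothetical weights standing in for what a trained model would learn.
WEIGHTS = {"new_country": 0.25, "new_device": 0.25, "new_browser": 0.15,
           "unusual_hour": 0.15, "mouse_speed_out_of_range": 0.2}


def anomaly_signals(p: Profile, event: dict) -> dict[str, bool]:
    lo, hi = p.mouse_speed_range
    return {
        "new_country": event["country"] not in p.countries,
        "new_device": event["device"] not in p.devices,
        "new_browser": event["browser"] not in p.browsers,
        "unusual_hour": event["hour"] not in p.active_hours,
        "mouse_speed_out_of_range": not lo <= event["mouse_speed"] <= hi,
    }


def risk_score(p: Profile, event: dict) -> float:
    return sum(WEIGHTS[name] for name, hit in anomaly_signals(p, event).items() if hit)


def recommend(score: float, challenge_at: float = 0.5) -> str:
    # Hypothetical two-level mapping; only "Challenge" comes from the case study.
    return "Challenge" if score >= challenge_at else "Allow"


profile = Profile()
usual = {"country": "US", "device": "pc", "browser": "Chrome", "hour": 14, "mouse_speed": 1.0}
taiwan = {"country": "TW", "device": "unknown", "browser": "Edge", "hour": 3, "mouse_speed": 0.5}

print(recommend(risk_score(profile, usual)))   # Allow
print(recommend(risk_score(profile, taiwan)))  # Challenge
```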

Model explainability in machine learning

Machine learning algorithms are often referred to as “black box” algorithms because many of them do not explicitly provide the reasoning behind their verdicts. For modern risk and fraud detection systems, this is unacceptable. To bridge this gap, the field of model explainability (or explainable AI) has emerged, including the use of SHAP values, which provide a specific method for explaining how different features contribute to a given model’s predictions. Using SHAP values, we can obtain not only a verdict but also a list of the features that affected it, ranked by importance.
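SHAP values build on Shapley values from cooperative game theory. For a model with only a few inputs, Shapley values can be computed exactly by brute force, as in the sketch below, which replaces absent features with a baseline value. The model, feature names and baseline are hypothetical; the open-source `shap` library provides much faster estimators for real models.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence

FEATURES = ["new_country", "new_device", "mouse_anomaly"]  # hypothetical feature names


def toy_risk_model(v: Sequence[float]) -> float:
    """Hypothetical model with an interaction term between country and device."""
    country, device, mouse = v
    return 0.4 * country + 0.3 * device + 0.2 * mouse + 0.1 * country * device


def shapley_values(model: Callable[[Sequence[float]], float],
                   x: Sequence[float], baseline: Sequence[float]) -> list[float]:
    """Exact Shapley values by enumerating every coalition of features.

    Features outside a coalition take their baseline value. The cost grows as 2**n,
    so brute force like this only suits a handful of features.
    """
    n = len(x)

    def value(coalition: frozenset) -> float:
        return model([x[i] if i in coalition else baseline[i] for i in range(n)])

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Classic Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


event, baseline = [1.0, 1.0, 1.0], [0.0, 0.0, 0.0]
phis = shapley_values(toy_risk_model, event, baseline)
for name, phi in sorted(zip(FEATURES, phis), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {phi:+.2f}")
# new_country: +0.45
# new_device: +0.35
# mouse_anomaly: +0.20
```

The country/device interaction term is split evenly between those two features, and the values sum to the difference between the event’s score and the baseline score, which is the property that lets SHAP attribute a verdict to individual features in order of importance.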

The need for innovation in the new threat landscape

Remember: attackers will only continue to get more sophisticated as they leverage the advancements in technology that occur every day. As new evasion tactics and techniques emerge, static rule sets quickly grow stale, requiring a more agile approach to detection that is continuously updated to detect and analyze changing attack patterns. Machine learning algorithms are critical for effective user anomaly detection and provide a robust, reliable and scalable solution to modern account takeover challenges.