Bad bot traffic now makes up almost half of all Internet traffic, but you might not guess it if you’re still using rules-based bot detection. Sixty-five percent of these bots use evasive tactics, which makes them harder to detect and renders classic approaches to bot mitigation increasingly ineffective. To control the growing threat, businesses need to switch to machine learning (ML) based methods.

But although advanced ML-based techniques can help protect against bot-related threats, such as credential stuffing, new account fraud, credential cracking and other forms of fraud, creating effective ML models requires detection and response vendors to first solve the key challenges of ML-based detection.

This blog post explains how our Research Labs overcame these challenges to improve ML-based bot detection, which semi-supervised ML techniques and features we use in our bot detection models and how we validate bot detection results.

Challenges of bot detection using machine learning

The main challenge complicating ML-based bot detection is labeling or label confidence. In order to develop effective ML models, we need a large amount of labeled data that can be used for training, testing and validation.

However, this is becoming a challenging task with regard to bot detection. Although we have rock-solid labels for different bot indications from our collected features, those indications usually represent the “stupid” bots, which our rule engine and automation tests can easily handle and detect. But what about the sneaky ones that are becoming harder to detect?

Just a few of the evasive techniques that let bots slip under the radar of classic detection methods are behavioral bots that use random mouse movements, advanced automation frameworks that expose less information about requests and click farms that assist bots by completing CAPTCHAs and other challenges.

These techniques make it harder to collect the data needed to detect bots, but in the end, even bot operators using advanced tools and techniques leave us some breadcrumbs to work with. Based on the assumption that all types of automation share similar properties, we devised a plan to overcome the labeling challenge: by analyzing data from all of our known bots, we might find which available data points can indicate some sort of automation. It was an interesting challenge to undertake.

Currently, we have instances with hard positive labels (bots), instances with hard negative labels (human users) and a wide field of unlabeled data. With this in mind, we chose semi-supervised learning, one of the three categories of model training in machine learning, which are explained below.

- Unsupervised learning addresses the problem of finding an interesting structure in our data set, such as segmentation into groups with common characteristics (clusters). Its name derives from the fact that we don’t use labeled data during model training, since in most cases we don’t have any and want to discover interesting insights within our data, like grouping types of customers.

- Supervised learning is used to predict a number (regression), a class (classification) or a mixture of both. For instance, it may be used to predict a number and afterwards create a threshold over the output to create classes or predict probability. During the training of a supervised learning model, we use only labeled data. An easy example of a use case for supervised learning is predicting the price of a house based on parameters like size, number of bedrooms, income level of the neighborhood or other factors.

- Semi-supervised learning (SSL) uses a mixture of supervised and unsupervised learning. In addition to unlabeled data, the algorithm is provided with some supervised information – albeit not necessarily for all examples. Often, this information will be the targets associated with some of the examples.

Considering the data available to us, SSL techniques provided a way to gain more information that we could use to supplement training on the hard labels, since the hard positive labels may carry features and indications of a bot instance that we have not yet taken into consideration.
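One way to picture the three groups of instances described above is as a single label vector. The sketch below is only an illustration: the inputs `rule_flagged_bot` and `verified_human` are hypothetical names, and how human sessions get their hard negative labels is an assumption, not something this post specifies. Marking unlabeled rows with `-1` follows the convention used by scikit-learn's semi-supervised estimators.

```python
import numpy as np

UNLABELED = -1  # same marker scikit-learn's semi-supervised estimators expect


def assign_hard_labels(rule_flagged_bot, verified_human):
    """Return 1 for hard positives (bots), 0 for hard negatives (humans), -1 otherwise.

    Both arguments are hypothetical boolean indicators, one entry per session.
    """
    rule_flagged_bot = np.asarray(rule_flagged_bot, dtype=bool)
    verified_human = np.asarray(verified_human, dtype=bool)
    labels = np.full(rule_flagged_bot.shape, UNLABELED, dtype=int)
    labels[verified_human] = 0
    labels[rule_flagged_bot] = 1  # assumption: a bot indication overrides a human one
    return labels


labels = assign_hard_labels(
    rule_flagged_bot=[True, False, False, False],
    verified_human=[False, True, False, False],
)
print(labels)  # [ 1  0 -1 -1]
```

Everything marked `-1` is the "wide field" that the semi-supervised step is meant to exploit.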

Semi-supervised learning techniques

There are many SSL techniques, including building neural networks with augmented functions on the unlabeled instances, assembling different models that deal with each data point differently or creating the labels beforehand using unsupervised clustering. The majority of current literature on the topic addresses SSL with regard to deep learning, but we’re handling it here with tabular data, which allows us more flexibility and deeper insights.

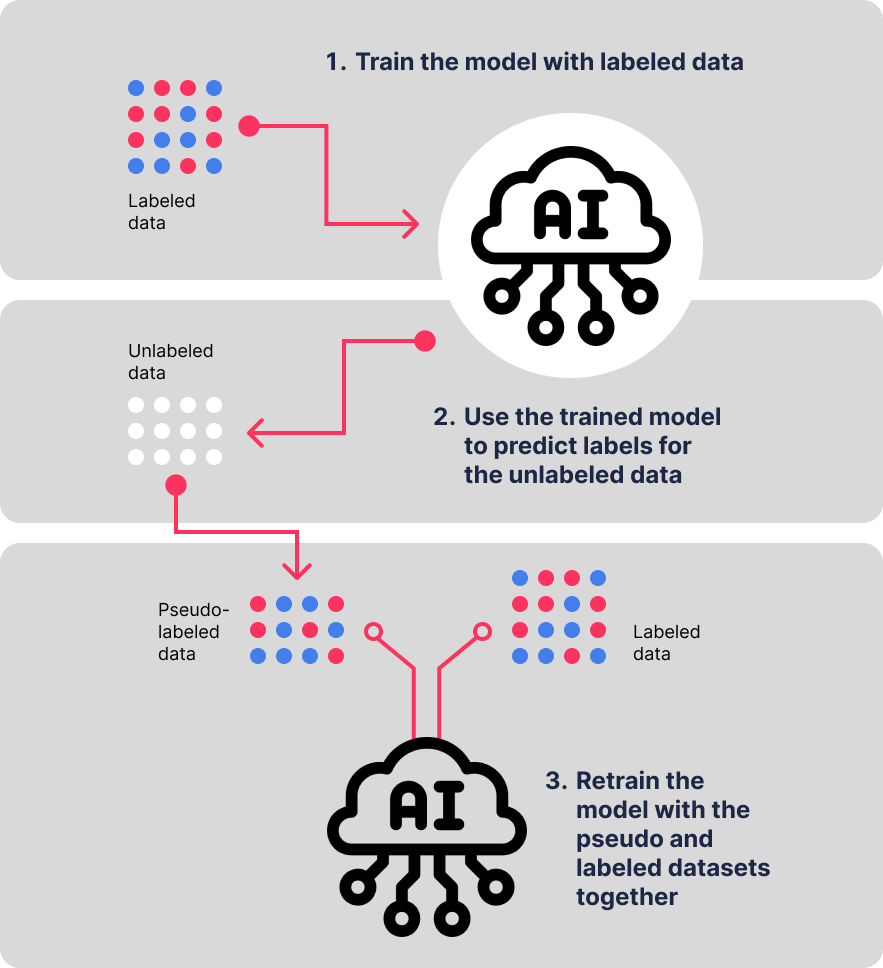

The technique we propose here is called pseudo-labeling, which we adapted from its implementation for neural networks. Its concepts can be applied to classic ML algorithms such as logistic regression and boosted tree models like XGBoost, CatBoost and others.

The main idea is simple. First, we train the model on labeled data. Then we use the trained model to predict labels for the unlabeled data, thus creating pseudo-labels. Finally, we combine the labeled data and the newly pseudo-labeled data into a new dataset, which is used to retrain the model.
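The three steps above can be sketched in a few lines with scikit-learn. This is a minimal illustration on synthetic data, not our production pipeline: the function name `pseudo_label_fit`, the logistic regression base model and the 0.95 confidence `threshold` are all assumptions made for the example. Keeping only confident predictions as pseudo-labels is a common variant of the technique rather than something the description above requires.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression


def pseudo_label_fit(X_labeled, y_labeled, X_unlabeled, threshold=0.95):
    """Train, pseudo-label the confident unlabeled rows, then retrain on both."""
    # Step 1: train on the hard labels only.
    base = LogisticRegression(max_iter=1000).fit(X_labeled, y_labeled)

    # Step 2: predict the unlabeled rows and keep the confident ones (assumed cutoff).
    proba = base.predict_proba(X_unlabeled)
    confident = proba.max(axis=1) >= threshold
    pseudo_y = base.classes_[proba.argmax(axis=1)][confident]

    # Step 3: retrain on hard labels plus pseudo-labels.
    X_combined = np.vstack([X_labeled, X_unlabeled[confident]])
    y_combined = np.concatenate([y_labeled, pseudo_y])
    final = LogisticRegression(max_iter=1000).fit(X_combined, y_combined)
    return final, int(confident.sum())


# Synthetic stand-in data: two labeled clusters and a diffuse unlabeled cloud.
rng = np.random.default_rng(0)
X_labeled = np.vstack([rng.normal(-2.0, 1.0, (50, 2)), rng.normal(2.0, 1.0, (50, 2))])
y_labeled = np.array([0] * 50 + [1] * 50)  # 0 = human, 1 = bot
X_unlabeled = rng.normal(0.0, 3.0, (200, 2))

model, n_added = pseudo_label_fit(X_labeled, y_labeled, X_unlabeled)
print(f"Added {n_added} pseudo-labeled rows before retraining")
```

The same loop works with a boosted tree classifier in place of logistic regression, as long as the model exposes class probabilities.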

Features of machine learning–based bot detection models

Bot detection models use a variety of interesting data points to detect malicious bots. These data points can include user behavior and user agent information, as well as other factors, such as the characteristics of the user’s device and their network activity. But we want to add as many data points as we can, so the model can learn and combine features that we haven’t spotted before.

In the Transmit Security Research Labs, we performed extensive research to collect these novel features, which we can segment into a few categories:

- Behavioral data, which is related to the users’ interactions with their device, such as typing speeds, time deltas between keystrokes, mouse movement speed, mouse movement acceleration, curvature angles of mouse movements or the variance of change in those features.

- Device data, including device characteristics that we retrieve by performing various performance tests, from CPU to audio card and GPU.

- Network data on various network features and enrichments like IP location, IP ASN type (such as hosting server or proxy) or IP ASN vendor (for classifying suspicious vendors).

- Velocity data such as the rate of registration attempts, the number of actions submitted by a unique IP address, the number of successful registrations or failed login attempts and the number of clicks or transitions between pages that took place within specific time periods. These counters are aggregated by IP, device fingerprint and user ID.
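To make two of these categories concrete, here is a hedged sketch of how behavioral and velocity features might be computed. The function `mouse_features`, the class `SlidingWindowCounter` and the feature names are hypothetical and chosen for illustration; they are not the features or code used in our models. The sketch assumes each trajectory has at least three samples with strictly increasing timestamps, and that events arrive in time order for each key.

```python
from collections import defaultdict, deque

import numpy as np


def mouse_features(t, x, y):
    """Summarize one mouse trajectory: times t in seconds, positions x, y in pixels."""
    t, x, y = (np.asarray(a, dtype=float) for a in (t, x, y))
    dt = np.diff(t)
    dx, dy = np.diff(x), np.diff(y)
    speed = np.hypot(dx, dy) / dt        # pixels per second on each segment
    accel = np.diff(speed) / dt[1:]      # change in speed between segments
    heading = np.arctan2(dy, dx)         # direction of each segment
    # Turning angle between consecutive segments, wrapped to [-pi, pi).
    turn = (np.diff(heading) + np.pi) % (2 * np.pi) - np.pi
    return {
        "speed_mean": float(speed.mean()),
        "speed_var": float(speed.var()),
        "accel_var": float(accel.var()),
        "turn_abs_mean": float(np.abs(turn).mean()),
    }


class SlidingWindowCounter:
    """Count events per key (IP, device fingerprint or user ID) in the last window_s seconds."""

    def __init__(self, window_s):
        self.window_s = window_s
        self._events = defaultdict(deque)

    def add(self, key, ts):
        """Record an event at timestamp ts and return the count inside the window."""
        q = self._events[key]
        q.append(ts)
        while q[0] <= ts - self.window_s:  # drop events that fell out of the window
            q.popleft()
        return len(q)


# A perfectly straight, constant-speed path has zero variance and zero turning.
print(mouse_features(t=[0, 1, 2, 3], x=[0, 10, 20, 30], y=[0, 0, 0, 0]))

logins_per_ip = SlidingWindowCounter(window_s=60)
for ts in (0, 30, 61):
    count = logins_per_ip.add("203.0.113.7", ts)
print(count)  # 2: the event at t=0 has left the 60-second window
```

A scripted path like the straight line above yields near-zero variance in speed and turning angle, which is the kind of signal the behavioral features are meant to capture.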

Validating bot detection results

If you recall, the main goal of these experiments was to detect bots that we haven’t detected before. This raises the question: how can we validate whether a new prediction made by the bot detection model is accurate?

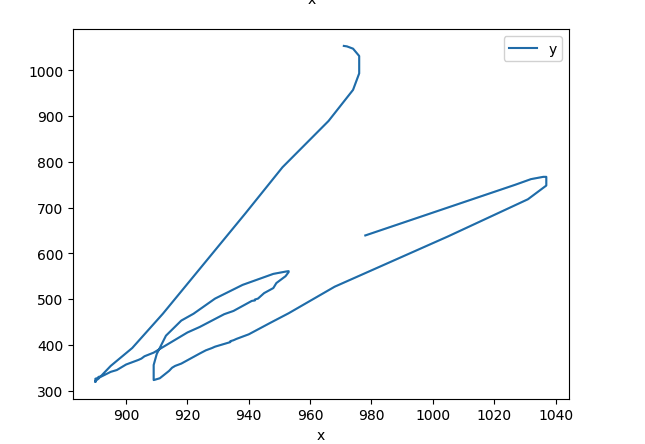

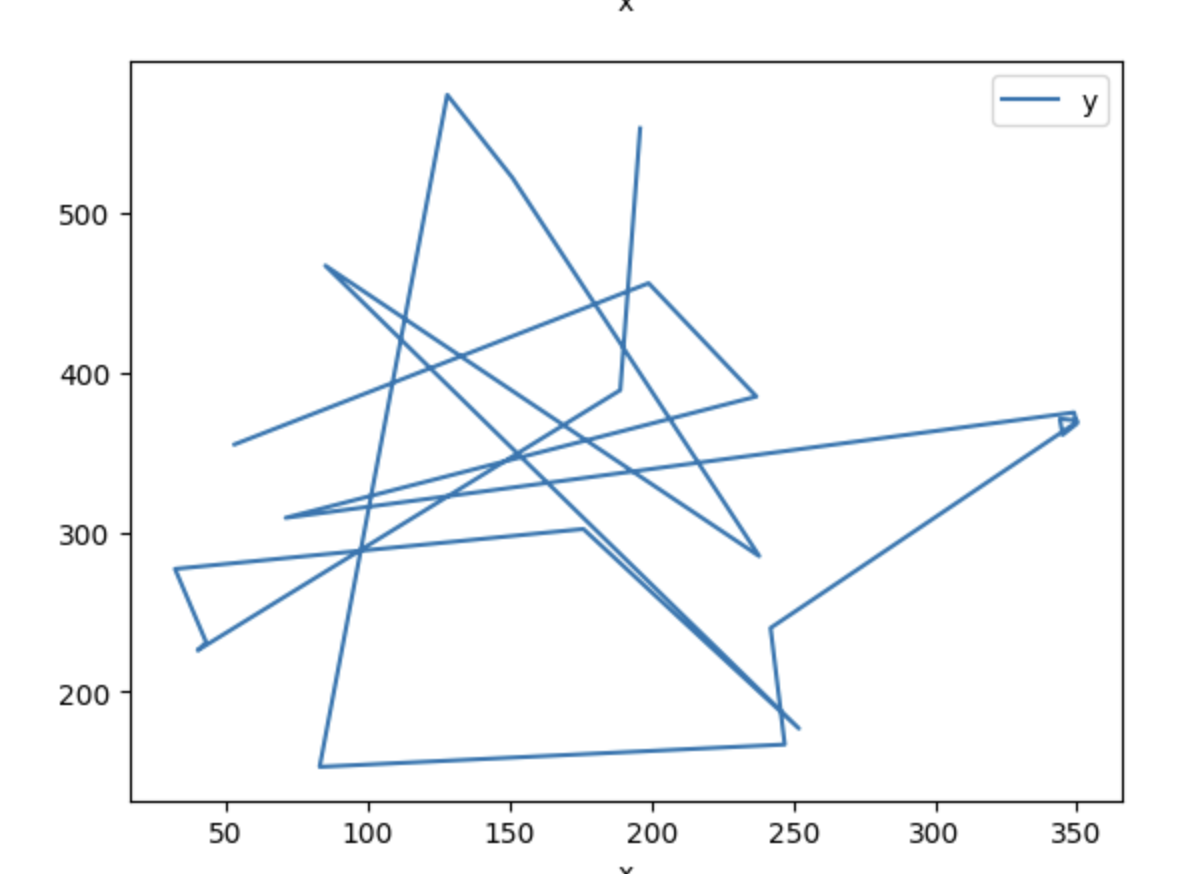

To validate these results, we took samples of new bot instances and plotted the movement of their mouse movements and behavioral data, which we compared to human instances. At the same time, we created parallel automations that we know we have not detected before in order to determine how the model is generalizing to new data.

Below, you can see mouse movement plot examples that show the difference between bot and human movement on a registration page:

Based on these experiments, we were able to further improve our model’s ability to detect anomalies that can indicate sophisticated bots, ensuring that we can continue to stay ahead of emerging evasion tactics.
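The generalization check with previously undetected automations can be expressed as a simple detection rate over sessions the model never saw during training. The sketch below uses synthetic data, and the helper `held_out_detection_rate` and the 0.5 decision `threshold` are hypothetical choices for illustration only.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression


def held_out_detection_rate(model, X_new_automation, threshold=0.5):
    """Fraction of held-out automation sessions that the model scores as bots."""
    bot_col = list(model.classes_).index(1)  # column holding the bot probability
    scores = model.predict_proba(X_new_automation)[:, bot_col]
    return float((scores >= threshold).mean())


# Synthetic stand-in data: 0 = human, 1 = known bot.
rng = np.random.default_rng(1)
X_train = np.vstack([rng.normal(-2.0, 1.0, (100, 2)), rng.normal(2.0, 1.0, (100, 2))])
y_train = np.array([0] * 100 + [1] * 100)
model = LogisticRegression(max_iter=1000).fit(X_train, y_train)

# Sessions from an automation that was deliberately kept out of training.
X_new_automation = rng.normal([1.5, 3.0], 1.0, (40, 2))
rate = held_out_detection_rate(model, X_new_automation)
print(f"Detected {rate:.0%} of held-out automation sessions")
```

Because every held-out session is known to be automated, this rate is the model's recall on that automation, which complements the visual comparison of mouse movement plots.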

Conclusion

In order to detect malicious bots, bot detection models use a variety of interesting data points, which can include user behavior and user agent information, as well as other factors, such as the characteristics of the user’s device and their network activity.

However, classic rule-based bot detection is based on common combinations of features — meaning that a bot that does not adhere to those specific combinations of characteristics will evade detection and be tagged as negative. And, as fraudsters continue to deploy increasingly sophisticated techniques to escape detection, this false negative rate will only continue to rise.

This is in contrast to ML and AI-based models, which make their decisions from training on millions of examples in order to learn different combinations that a rules engine would never perceive as bot indicators. This means that they are capable of keeping up with new fraud techniques as they emerge, without the need for constant manual tuning and analysis that classic detection methods require.

By analyzing a wide range of data points and applying advanced AI techniques such as semi-supervised learning and deep learning, combined with security research and threat intelligence, bot detection models can accurately distinguish between human and bot activity and protect against malicious bots.

To find out more about Transmit Security’s bot detection capabilities, check out our bot detection solution brief or read our case study from a leading US bank that was able to detect 500% more bot attacks using our Detection and Response Services.