The job of a fraud analyst is not an easy one. Fraud analysts are responsible for identifying, investigating and preventing fraud, so they must be able to analyze data quickly, both to stop fraud as it happens and to investigate incidents after they have been detected or reported.

Often this requires not only fraud detection software, which may mean running multiple solutions, but also risk management and statistical analysis. In the process, analysts typically work with other departments, such as security, legal and compliance teams. However, this kind of collaboration becomes difficult when the rules that govern fraud detection and mitigation are too complex or opaque to update effectively, or even to understand.

To solve this problem, Transmit Security has designed a rules engine for our Detection and Response Services that provides actionable recommendations along with transparency into the reasons behind them. This blog post will dive into how our rules engine calculates risk, how we improve visibility into its decisioning logic and how it can help analysts and security teams investigate and mitigate fraud in their applications.

The complexity of decisioning logic

Distinguishing between legitimate and fraudulent behavior first requires gathering data on an application’s users and how they are interacting with the application. Some examples include:

- Device: browser language, OS settings, patches, carrier, GPU

- Network: IP address, user agent, proxies, VPN, data center

- Global intelligence: IP reputation, device reputation, data center reputation

- Authentication: pass/fail/abandon, authenticator method, velocity

- Account changes: logins, transactions, password changes

- App activity: page sequences, action patterns, usage times

- Behavioral biometrics: mousing patterns, touchscreen patterns, keystroke dynamics

However, a single data point is not enough to accurately assess risk, as even a seemingly simple task like determining a user’s location may require multiple data points. For example, an IP address could be used to estimate a user’s location, but fraudsters can cloak the geolocation of their requests via IP spoofing or proxies. Adding to the challenge, some ISPs use shared or dynamic IP addresses that make it harder to discern where a specific user is located.

Enriched telemetry and context

For more reliable assessment of a user’s location, this raw data must be enriched with other information, such as GPS data from mobile devices, the strength of nearby Wi-Fi access points or the use of proxies. Because different solutions may use more or fewer data points or combine them in different ways, the accuracy and value of these signals will vary based on the solution.
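To make the idea of enrichment concrete, here is a minimal Python sketch that combines several location data points into a single signal with a confidence label. It is an illustration under stated assumptions, not Transmit Security's implementation: the `LocationTelemetry` fields, the `enrich_location` function and the confidence labels are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LocationTelemetry:
    """Hypothetical raw inputs; the field names are illustrative only."""
    ip_country: Optional[str]   # country inferred from IP geolocation
    gps_country: Optional[str]  # country reported by device GPS, if available
    is_proxy_or_vpn: bool       # whether the IP belongs to a known proxy or VPN


def enrich_location(t: LocationTelemetry) -> dict:
    """Combine several data points into one location signal with a confidence label."""
    both = t.gps_country is not None and t.ip_country is not None
    if both and t.gps_country != t.ip_country:
        # The sources disagree: surface the mismatch instead of hiding it.
        return {"country": t.gps_country, "confidence": "low", "mismatch": True}
    if t.is_proxy_or_vpn and t.gps_country is None:
        # Only a possibly cloaked IP address is available.
        return {"country": t.ip_country, "confidence": "low", "mismatch": False}
    if both:
        # Two independent sources agree.
        return {"country": t.gps_country, "confidence": "high", "mismatch": False}
    country = t.gps_country or t.ip_country
    confidence = "medium" if country else "unknown"
    return {"country": country, "confidence": confidence, "mismatch": False}
```

In this sketch, a lone IP address behind a proxy yields a low-confidence signal, while agreement between GPS and IP geolocation yields a high-confidence one. A different solution could weigh the same inputs differently, which is why signal quality varies between vendors.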

While more valuable than raw telemetry, enriched telemetry is still not enough to decide whether a user's behavior is suspicious, even when compared against that user's known behavioral patterns. Users may travel to new locations, switch devices and change their behavior in many other ways. Challenging them with step-ups in each of these scenarios would make for a poor user experience.

To avoid adding too much friction, signals must be contextualized and aggregated. But the tools that produce them often specialize in different methods of detection, so a business may need one solution for bot detection, another for threat intelligence and another for behavioral biometrics, to name just a few. This makes gaining a complete picture of all the information needed for effective decisioning a difficult task.

Rules spaghetti and black-box algorithms

To gain a full picture of all the information that could be used to detect fraud and determine how to respond to requests, teams must often decide for themselves how to integrate different solutions and combine their risk assessments. Creating these heuristic rules can be a long and complex task, and rules can quickly become outdated as fraudsters develop new workarounds to evade detection.

And while machine learning (ML) can be used to efficiently build decisioning logic, find more complex patterns in behavioral anomalies and provide clearer analysis of suspicious behavior, ML algorithms are often black boxes that don't provide insight into the reasoning behind their decisions. This makes it difficult to spot false positives and false negatives until long after fraud has occurred, and it impedes investigations into claims of fraud or identity theft.

Whether dealing with rules spaghetti across a variety of siloed tools or opaque ML algorithms, the result is the same: the decisioning logic used to detect and mitigate fraud is often hard to understand and explain, and even harder to update in the face of changing threats.

How Detection and Response evaluates risk and trust

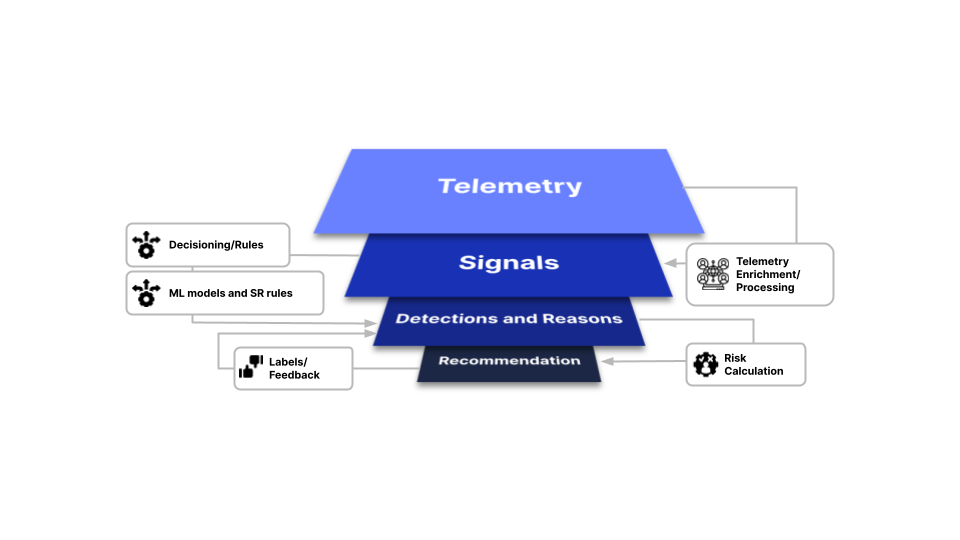

The decisioning logic for our Detection and Response service can be thought of as a funnel that aggregates telemetry from a variety of sources and distills numerous risk signals and multiple detection methods into recommendations on how to handle each request.

Throughout this process:

- Raw telemetry, such as IP address, user agent, screen measurements and other information, is collected on the client side and sent, unprocessed, to the Transmit Security Risk Engine for enrichment.

- Signals, such as network type, geolocation, OS and more, are created from our analytics and heuristics, as well as third-party data sources.

- Detections of bot behavior, cookie reuse, IP velocity and other risk and trust indicators are made using our ML-based risk engine. These detections are compared against any custom rules or labels provided by the customer to determine how requests should be handled and the reasons that influence those decisions. No black boxes!

- Recommendations to Trust, Allow, Challenge or Deny each request are returned, summarizing the risk calculation as one of four categories.
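The final stages of the funnel above, where detections are turned into a recommendation with reasons, can be sketched in a few lines of Python. This is a simplified illustration only: the detection names, weights, baseline and thresholds are hypothetical, and a simple weighted sum stands in for the ML-based risk engine described in this post.

```python
from typing import NamedTuple

# Hypothetical weights; a real engine would learn these from data.
DETECTION_WEIGHTS = {
    "bot_behavior": 0.6,
    "cookie_reuse": 0.3,
    "high_ip_velocity": 0.3,
    "trusted_device": -0.4,  # trust indicators lower the score
}
BASELINE_RISK = 0.2  # assumed score for a request with no detections


class Decision(NamedTuple):
    recommendation: str
    risk_score: float
    reasons: list


def recommend(detections: set) -> Decision:
    """Map a set of detections to Trust, Allow, Challenge or Deny, with reasons."""
    contributions = {d: DETECTION_WEIGHTS[d] for d in detections if d in DETECTION_WEIGHTS}
    # Clamp the weighted sum to the range [0, 1].
    score = max(0.0, min(1.0, BASELINE_RISK + sum(contributions.values())))
    if score < 0.1:
        label = "Trust"
    elif score < 0.4:
        label = "Allow"
    elif score < 0.7:
        label = "Challenge"
    else:
        label = "Deny"
    # Rank reasons by how strongly each detection moved the score (ties by name).
    reasons = sorted(contributions, key=lambda d: (-abs(contributions[d]), d))
    return Decision(label, score, reasons)
```

For example, `recommend({"cookie_reuse"})` produces a Challenge with `cookie_reuse` as its only reason, while `recommend({"trusted_device"})` produces a Trust. Returning the reasons next to the label is what keeps the decision explainable.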

All raw data, signals, reasons and recommendations are exposed through our UI or APIs and delivered in real time. The top reasons for each recommendation are determined using methods from a field of machine learning known as model explainability. For example, a request might be challenged because of impossible travel, or allowed because it was made from a trusted user device.
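Impossible travel is a common example of such a reason: two requests from the same user arrive from locations too far apart to be covered in the time between them. The Python sketch below shows one generic way to check for it, using the haversine great-circle distance. The speed and distance thresholds are assumptions chosen for illustration, and this is not a description of how Detection and Response computes the signal.

```python
import math
from datetime import datetime

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_SPEED_KMH = 900.0  # assumed limit, roughly airliner cruising speed
MIN_DISTANCE_KM = 50.0           # assumed tolerance for geolocation inaccuracy


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_impossible_travel(prev: tuple, curr: tuple) -> bool:
    """prev and curr are (latitude, longitude, timestamp) for consecutive requests."""
    distance = haversine_km(prev[0], prev[1], curr[0], curr[1])
    if distance <= MIN_DISTANCE_KM:
        return False  # close enough to be the same place
    hours = (curr[2] - prev[2]).total_seconds() / 3600
    if hours <= 0:
        return True  # far apart with no time elapsed
    return distance / hours > MAX_PLAUSIBLE_SPEED_KMH


new_york = (40.7128, -74.0060, datetime(2024, 1, 1, 12, 0))
london_soon = (51.5074, -0.1278, datetime(2024, 1, 1, 13, 0))
london_later = (51.5074, -0.1278, datetime(2024, 1, 1, 22, 0))
print(is_impossible_travel(new_york, london_soon))   # True: one hour apart
print(is_impossible_travel(new_york, london_later))  # False: ten hours apart
```

On its own, a check like this would still misfire for users on VPNs, which is why it works better as one reason among several than as a standalone rule.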

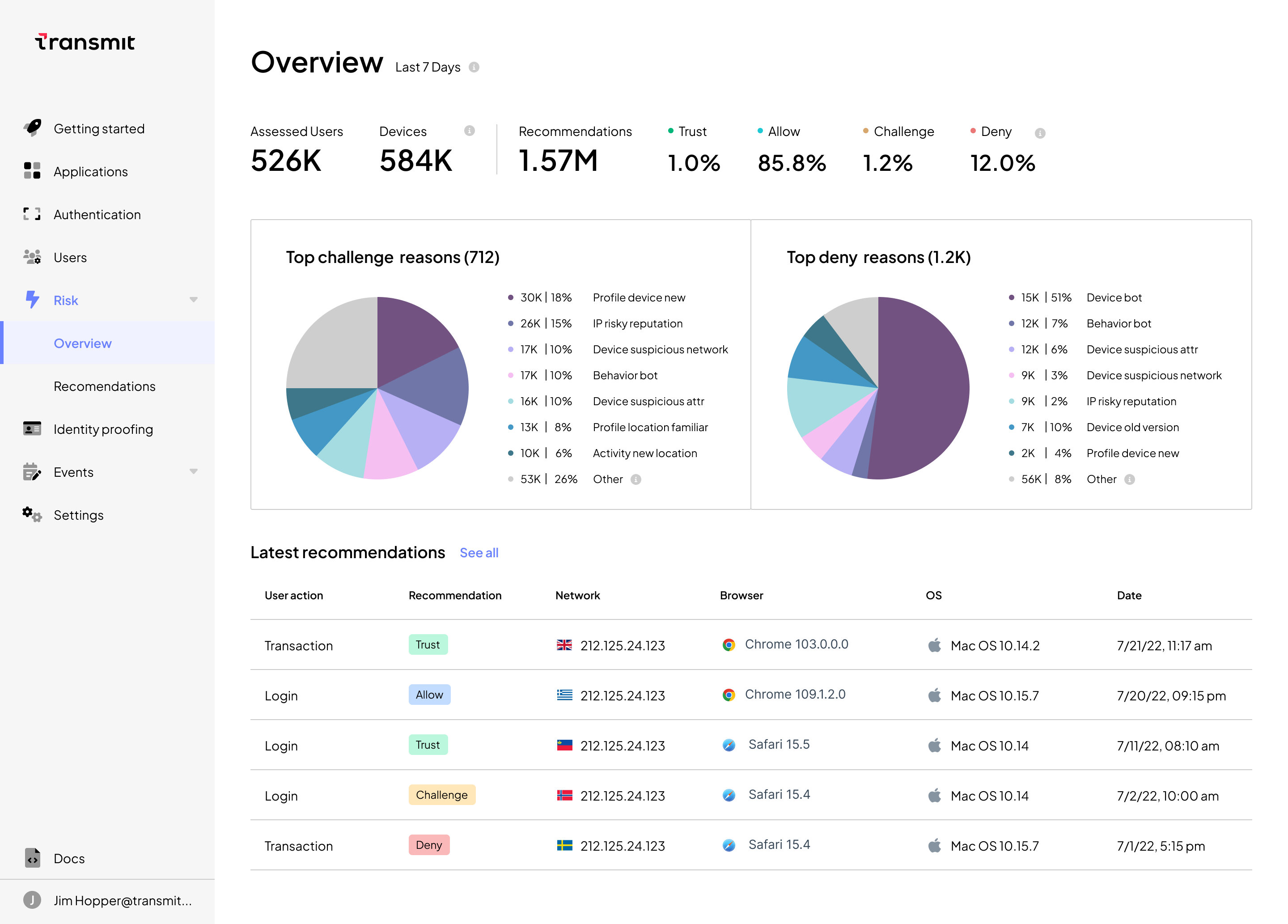

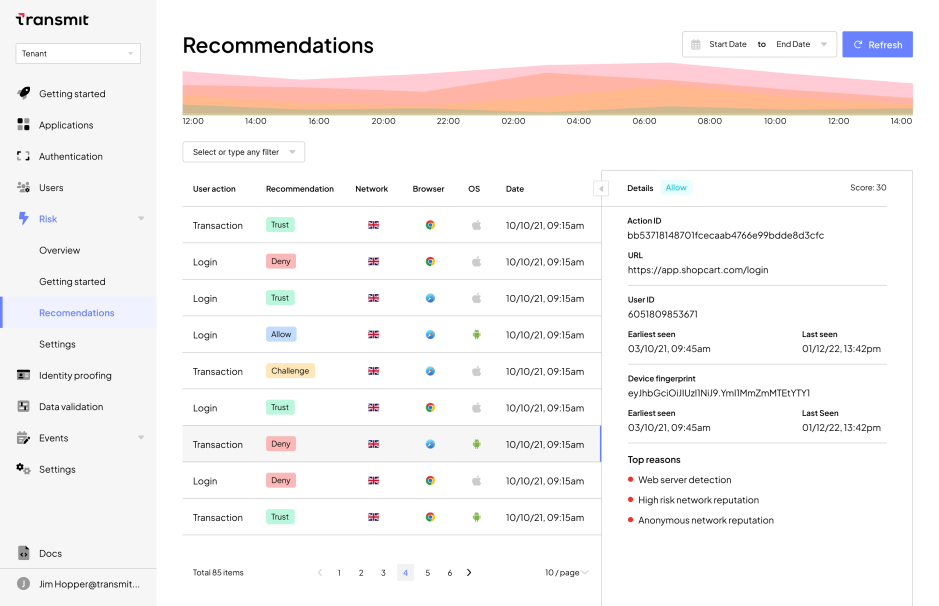

Within the Detection and Response UI, administrators can access analytics to easily:

- Investigate specific fraud claims by viewing a real-time list of the affected user's requests, along with the accompanying recommendations and the reasons for them

- View the distribution of Trust, Allow, Challenge and Deny recommendations over time to quickly spot large-scale attack campaigns

- Filter for specific recommendation types or within specific moments of the user journey in order to drill down into application-wide patterns of suspicious behavior

- See a breakdown of the top reasons for challenging and denying requests
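Teams that pull recommendations through an API rather than the UI can build a similar over-time view themselves. The Python sketch below groups recommendations by hour and flags hours dominated by Challenge and Deny outcomes. The `(timestamp, recommendation)` event shape and the 50% threshold are assumptions for illustration and do not reflect the actual API response format.

```python
from collections import Counter, defaultdict
from datetime import datetime


def hourly_distribution(events) -> dict:
    """Count recommendations per hour from (timestamp, recommendation) pairs."""
    buckets = defaultdict(Counter)
    for timestamp, recommendation in events:
        hour = timestamp.replace(minute=0, second=0, microsecond=0)
        buckets[hour][recommendation] += 1
    return dict(buckets)


def flag_spikes(distribution: dict, threshold: float = 0.5) -> list:
    """Return the hours in which Challenge plus Deny exceed the given share of requests."""
    flagged = []
    for hour, counts in sorted(distribution.items()):
        total = sum(counts.values())
        risky = counts["Challenge"] + counts["Deny"]
        if total and risky / total > threshold:
            flagged.append(hour)
    return flagged
```

An hour in which most requests are challenged or denied is a candidate for closer inspection, for instance as a possible credential stuffing or bot campaign.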

With this contextualized information, businesses can more easily understand decisioning logic. They can also tune decisioning by using our Labels API to provide feedback on recommendations, or by using our Identity Decisioning Services to quickly create, update, test and safely deploy no-code custom rules.

Tools for understanding and customizing business logic

Through sophisticated ML analysis of multiple detection methods and robust telemetry, Detection and Response Services enable businesses to consolidate the numerous fraud detection tools that complicate and obscure decisioning logic. In addition, Transmit Security simplifies the work of fraud analysts by providing better visibility into the reasoning behind its recommendations and the ability to quickly tune and update rules to respond to emerging threats.

To find out more about how Detection and Response can benefit your business, read our case study on how a leading bank was able to save millions of dollars in fraud losses, or contact an expert for a personalized demo.