If you think all bots are a menace, think again. Popular search engines, text messaging apps, and chatbots — including Siri and Alexa — would not exist without good bots: software programs that perform specific tasks to benefit a user or organization. Good bots can be used for various purposes, including customer service, web crawling, virtual assistants, healthcare, reservations, entertainment, e-commerce, and content sharing, so it’s crucial that bot mitigation techniques used by businesses do not block good bots along with the bad.

This blog post will provide a primer on good bots, including some key examples, how end users can benefit from them, and some solutions and techniques businesses can use to distinguish good bots from malicious ones.

Good bots: Examples and use cases

Types of good bots

The first chatbot, ELIZA, was developed by MIT professor Joseph Weizenbaum in the mid-1960s. Since then, many other bots have been developed, including search engine bots that crawl the Internet to give search engines like Google, Yahoo, and Bing the information they need to build their indexes and return relevant results in response to user queries.

Good bots can perform a range of automated tasks, such as messaging, monitoring, and retrieving information — in addition to indexing websites. Some examples of good bots include:

- Crawlers like Googlebot and Bingbot methodically browse the Internet to index and update online content for search engines and keep other valuable data, such as pricing and contact information, up to date and easily accessible.

- Chatbots interact with humans through text or sound and can be used for automated text messaging, customer service, personal assistants, mobile ordering, and more.

- Shopbots scour the Internet for the lowest prices on items users are searching for. This narrows search results and saves users time, since they do not have to comb through individual websites to compare product prices.

- Monitoring bots automatically track aspects of a website or system, such as uptime, availability, responsiveness, and performance, to ensure that the site performs optimally. Many tools provide monitoring bots, such as UptimeRobot, Pingdom, and Cloudflare.

- Marketing bots, such as SemrushBot and AhrefsBot, are used by SEO and content marketing software to crawl websites for backlinks, organic and paid keywords, traffic volume, and more, helping marketers analyze and improve how sites rank in search engine results.

How businesses can benefit from good bots

Good bots offer a host of use cases for businesses, helping them improve operational efficiency and customer experience by decreasing wait times, automating alerts, and performing repetitive tasks at scale. Some of the top benefits they provide include:

- Brand awareness: Crawlers help businesses make their content, services, and contact information readily accessible to users via Internet search, helping them provide an effective and up-to-date online presence through search engine positioning, front-end optimization, and online marketing.

- Customer service: Customer service bots can be used to provide 24/7 support and quickly answer users’ questions at scale without waiting for human agents. By automating answers to common questions and other repetitive tasks, these bots can alleviate some of the workload from human customer support employees, allowing them to work on more complex cases.

- Boosting sales: By providing personalized recommendations based on users’ browsing history and purchases, good bots can boost sales, increase customer engagement, and help users find relevant products.

- Site moderation: Good bots help chat platforms such as Discord and Twitch moderate users, send out memes, and even archive messages. Chat services that allow pre-made or custom-made bots gain an edge over competitors, as users increasingly want to add a variety of bots to their chat rooms.

- Automation: Good bots can perform repetitive tasks, such as detecting and blocking malicious traffic, automatically and with little to no intervention from their creators, completing simple tasks much faster and more efficiently than human users can.

- Performance and security: Monitoring bots that alert users of significant changes or downtime can help businesses detect and prevent issues with their websites and provide valuable insights into website health and performance.

Managing bots: The good, the bad and the ugly

Just as good bots can crawl sites, interact with users, and automate tasks, bad bots can weaponize these same capabilities against websites and applications to perform malicious activities like credential stuffing, price scraping, delivering spam, and creating fraudulent accounts with stolen or synthetic identities. In addition, even good bots can degrade performance or cause availability issues, since constantly polling sites for new and updated content can overwhelm servers.

To guard against these issues, businesses need to implement a bot management strategy that leverages techniques for controlling how good bots interact with their sites and keeps unwanted bots away.
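Rate limiting is one common way to keep even well-behaved crawlers and monitoring bots from overwhelming a server with polling requests. The following is a minimal sketch of a per-client token bucket in Python, assuming each request can be attributed to a client key such as a verified bot name or an IP address; the class name, parameters, and bot names are hypothetical and not part of any particular product.

```python
import time
from typing import Callable


class PerClientRateLimiter:
    """Hypothetical token-bucket rate limiter with one bucket per client key.

    Each client may burst up to `capacity` requests and is then refilled at
    `rate` requests per second. `clock` is injectable so the limiter can be tested.
    """

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        # client key -> (tokens remaining, time of last update)
        self.buckets: dict[str, tuple[float, float]] = {}

    def allow(self, client: str) -> bool:
        now = self.clock()
        # New clients start with a full bucket.
        tokens, last = self.buckets.get(client, (self.capacity, now))
        # Refill in proportion to elapsed time, capped at the bucket capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        if tokens >= 1:
            self.buckets[client] = (tokens - 1, now)
            return True
        self.buckets[client] = (tokens, now)
        return False
```

A token bucket tolerates short bursts (useful for a crawler fetching a page and its assets) while capping the sustained request rate; requests that are refused would typically receive an HTTP 429 Too Many Requests response.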

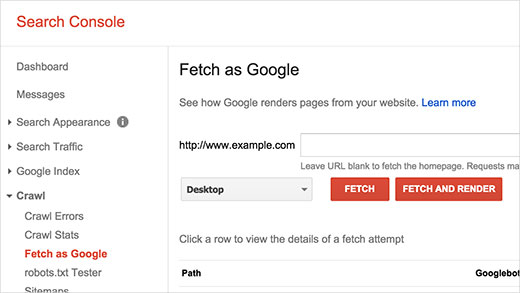

For example, search engine bots check a file called robots.txt, located at the root of a website, to determine which URLs they may access on the site. By placing a robots.txt file at the root of their web server, site owners can define rules for which pages or files may be crawled, keeping search engine bots away from unimportant or duplicate pages and preventing crawl requests from overloading their servers. They can also use tools such as Fetch as Bingbot in Bing Webmaster Tools or the URL Inspection tool in Google Search Console to find out which resources crawlers can access, which are blocked, and how different pages are rendered.
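To illustrate how a compliant crawler interprets these rules, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The robots.txt content, the example.com URLs, and the `ExampleSlowBot` user agent are hypothetical. Note that this check runs on the crawler's side: it describes what a well-behaved bot should do and enforces nothing on the server.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep all crawlers out of internal search results
# and cart pages, and shut out one (hypothetical) bot entirely.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart/

User-agent: ExampleSlowBot
Crawl-delay: 10
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(user_agent: str, url: str) -> bool:
    """Return True if robots.txt permits `user_agent` to fetch `url`."""
    return parser.can_fetch(user_agent, url)


print(is_allowed("Googlebot", "https://www.example.com/products/42"))      # True
print(is_allowed("Googlebot", "https://www.example.com/search?q=shoes"))   # False
print(is_allowed("ExampleSlowBot", "https://www.example.com/products/42")) # False
print(parser.crawl_delay("ExampleSlowBot"))                                # 10
```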

Allowlists can also be used to limit access to certain resources, applications, or sites by providing an up-to-date list of desirable bots — such as crawlers and monitoring bots — while blocking other bots from getting in.
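A minimal sketch of how an allowlist might pair a bot's declared identity (its User-Agent header) with the IP ranges its operator publishes, so that a request claiming to be an allowlisted bot from an unlisted address is flagged rather than let through. The bot names, user-agent tokens, and ranges are hypothetical placeholders drawn from documentation-only address blocks; a real deployment would load each operator's published ranges.

```python
from __future__ import annotations

from dataclasses import dataclass
from ipaddress import IPv4Network, IPv6Network, ip_address, ip_network


@dataclass(frozen=True)
class AllowedBot:
    name: str
    ua_token: str  # lowercase substring expected in the User-Agent header
    networks: tuple[IPv4Network | IPv6Network, ...]  # ranges published by the operator


# Hypothetical allowlist. The bot names are placeholders and the ranges are
# documentation-only blocks (RFC 5737 / RFC 3849), not any real bot's addresses.
ALLOWLIST = (
    AllowedBot("ExampleCrawler", "examplecrawler",
               (ip_network("192.0.2.0/24"), ip_network("2001:db8::/32"))),
    AllowedBot("ExampleMonitor", "examplemonitor",
               (ip_network("198.51.100.0/25"),)),
)


def classify(user_agent: str, client_ip: str) -> str:
    """Return 'allow', 'impersonator', or 'unknown' for an incoming request."""
    ip = ip_address(client_ip)
    ua = user_agent.lower()
    for bot in ALLOWLIST:
        if bot.ua_token in ua:
            # The request claims to be an allowlisted bot, so trust the claim only
            # if the source address falls inside that bot's published ranges.
            # (Mixing IPv4 and IPv6 is safe: `in` simply returns False.)
            if any(ip in net for net in bot.networks):
                return "allow"
            return "impersonator"
    # Not an allowlisted bot: hand off to the site's other bot defenses.
    return "unknown"
```

The User-Agent header alone proves nothing, since any client can send `ExampleCrawler` in it; the IP check is what gives the allowlist its value.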

But although good bots are programmed to follow the rules in robots.txt and other bot management measures, bad bots are not. Robots.txt only outlines the rules governing bot access; it does not actively block bots from accessing resources. And while allowlists can keep malicious bots out of a site, attackers continuously search for ways to evade detection and work around these rules.

How Transmit Security helps businesses welcome good bots

Many good bot operators publish the IP address ranges their bots use, which helps sites let those bots in and distinguish them from bad bots. However, these ranges can change over time, so lists must be kept up to date to ensure that good bots are not marked as malicious.

To do so, businesses can leverage intelligence from detection and response services and independent projects that maintain good bot lists. Transmit Security Research Labs continuously updates our lists of good bots to give our customers the option to allow traffic from those bots and ensure they are not marked as malicious by detection services.
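As an illustration of keeping such lists current, here is a minimal sketch that loads a published range feed and refuses to use it once it is older than a chosen maximum age. The feed content, its field names, and the seven-day threshold are assumptions for illustration, not any specific operator's format or Transmit Security's implementation.

```python
from __future__ import annotations

import ipaddress
import json
from datetime import datetime, timedelta, timezone

# Hypothetical feed of published crawler ranges. The field names are an
# assumption: check each operator's documentation for its real URL and schema.
SAMPLE_FEED = """
{
  "creationTime": "2024-05-01T00:00:00+00:00",
  "prefixes": [
    {"ipv4Prefix": "192.0.2.0/24"},
    {"ipv6Prefix": "2001:db8::/32"}
  ]
}
"""


def load_ranges(feed_text: str, max_age: timedelta = timedelta(days=7),
                now: datetime | None = None) -> list:
    """Parse a published-range feed, refusing lists older than `max_age`."""
    feed = json.loads(feed_text)
    created = datetime.fromisoformat(feed["creationTime"])
    if now is None:
        now = datetime.now(timezone.utc)
    # A stale list may be missing newly added ranges, which would cause
    # legitimate bots to be flagged as malicious.
    if now - created > max_age:
        raise ValueError(f"range list is stale (created {created.isoformat()})")
    networks = []
    for entry in feed["prefixes"]:
        prefix = entry.get("ipv4Prefix") or entry.get("ipv6Prefix")
        if prefix:
            networks.append(ipaddress.ip_network(prefix))
    return networks
```

Raising on a stale list is one design choice; a scheduled job might instead keep serving the last good list while it retries the download and raises an alert.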

Here are some examples of good bot IP lists that Transmit Security Research Labs keeps updated:

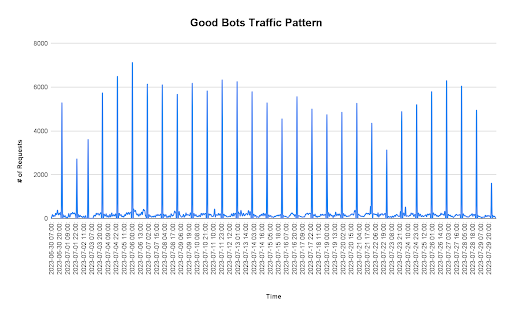

In addition, we study the behavioral patterns and requests of good bots to help better distinguish them from bad bots.

By understanding the known behavioral patterns of good bots, we can help businesses detect IP spoofing that could allow bad bots to impersonate crawlers or other good bots and identify other anomalies that indicate when bot-like behavior is malicious or risky.

This is done through machine learning–based bot detection techniques and a range of other detection methods that help us distinguish good bots from bad ones and detect bad bots that use evasive techniques to masquerade as legitimate users.
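For the specific problem of bad bots impersonating crawlers, operators such as Google and Microsoft document a simple check that any site can run: forward-confirmed reverse DNS. The sketch below illustrates that general technique in Python; it is not a description of how Transmit Security's detection works, and the function names and the `crawler.example.com` suffix are hypothetical placeholders (use the domains each operator documents).

```python
import ipaddress
import socket
from typing import Callable, Iterable


def _reverse_hostname(ip: str) -> str:
    """Return the PTR (reverse DNS) hostname for an IP address."""
    return socket.gethostbyaddr(ip)[0]


def _forward_addresses(hostname: str) -> list[str]:
    """Resolve a hostname to all of its IPv4 and IPv6 addresses."""
    return [info[4][0] for info in socket.getaddrinfo(hostname, None)]


def verify_crawler_ip(
    ip: str,
    allowed_suffixes: Iterable[str],
    reverse_lookup: Callable[[str], str] = _reverse_hostname,
    forward_lookup: Callable[[str], Iterable[str]] = _forward_addresses,
) -> bool:
    """Hypothetical forward-confirmed reverse DNS check for a claimed crawler IP."""
    try:
        claimed = ipaddress.ip_address(ip)
        # Step 1: reverse lookup. Whoever controls the IP block sets its PTR record.
        hostname = reverse_lookup(ip).rstrip(".").lower()
    except (OSError, ValueError):  # socket.herror/gaierror, or a malformed IP
        return False

    # Step 2: the hostname must belong to one of the operator's documented domains.
    suffixes = [s.lower() for s in allowed_suffixes]
    if not any(hostname == s or hostname.endswith("." + s) for s in suffixes):
        return False

    try:
        # Step 3: forward lookup. An attacker can point their own PTR record at a
        # crawler-like name, but cannot make the operator's DNS resolve it back.
        resolved = {ipaddress.ip_address(a) for a in forward_lookup(hostname)}
    except (OSError, ValueError):
        return False
    return claimed in resolved
```

The lookup functions are injectable so the logic can be tested without network access; in production, results are usually cached because DNS lookups on every request are slow.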

Conclusion

Good bots have significantly impacted society by providing valuable information and services to users while helping businesses automate tasks, improve their web presence, and scale their communications with users. These capabilities continue to evolve, as simple chatbots give way to powerful generative AI tools like ChatGPT that can provide more complex and humanlike answers to user queries.

But to reap the benefits of good bots without overloading systems or making themselves vulnerable to bot attacks, businesses must have a bot management strategy to define how good bots interact with their site and keep bad bots off of it. To find out more about how Transmit Security identifies bad bots, check out our bot mitigation brief, or contact Sales to find out more about bot management on our platform.