It’s been over a year and a half since the release of ChatGPT, and in this time, fraud analysts in the Transmit Security Research Lab have been examining the dark web to get ahead of seismic shifts in the fraud landscape. Their new Dark Web Threat Report contains key findings, including what you need to know about blackhat generative AI (GenAI) platforms, like FraudGPT and DarkBard.

These subscription-based GenAI services are empowering fraudsters at every skill level to probe for security vulnerabilities, generate malicious code or custom config (configuration) files, create deepfakes and churn out new fraud campaigns at unprecedented speed, driving up both the volume and the variety of attacks.

Our researchers found that most Fortune 500 companies, even those that haven’t suffered a recent breach, are exposed on the dark web, with large quantities of their customers’ accounts listed for sale, enabling turnkey account takeover (ATO) fraud. The supply chain begins with threat actors who craft specialized tools and automations, custom-made to evade the protections of their targets.

In this article and the report, we provide screenshots of the dark web, including fraud tools and “products” for sale, so you can see this criminal activity without visiting these sites yourself. The in-depth report also lists key security recommendations to help you combat this new breed of fraud.

If you have a professional responsibility (or a dark curiosity) to know more about this shady underworld, read the full report: “The GenAI-Fueled Threat Landscape: A Dark Web Research Report by the Transmit Security Research Lab.”

The dangers of GenAI without security safeguards

The Transmit Security Research Lab continues to find new ways in which GenAI is fueling the underground economy. Every day, hackers are developing new tools and tactics to evade or penetrate defenses, deceive victims, steal identities and take over accounts.

Their nefarious activities are further supported by fraud forums where sellers turn a profit and buyers feast on a steady stream of stolen identity data. This data often includes validated accounts and credit card numbers paired with credit ratings, with records showing excellent credit commanding higher prices.

A summary of our top 8 findings and the impact on customer identity security:

- GenAI feeds dark web sales: GenAI accelerates the sale of consumer accounts on the dark web and aids fraudsters in scaling attacks.

- AI-enhanced pentesting: Tools powered by AI automate pentesting to quickly gather intel on enterprise vulnerabilities and circumvent security protocols.

- Configuration file market: Config files used for validating accounts are sold for prices ranging from $6 to $400 USD, and advanced config files can validate up to 500 credentials per minute.

- Enhanced fraud tools: Bundled services such as Remote Desktop Protocol (RDP) access and credit card checkers are augmented by AI to streamline attack creation and are available with custom features for $2,200 or with the source code for $428.

- Synthetic identities: GenAI rapidly generates real or synthetic ID data to create hard-to-detect fraudulent accounts aged with 8+ years of order history to appear legitimate.

- Advanced fake IDs: GenAI makes it easy to create high-quality fake IDs that are able to bypass security checks, including most AI-driven identity verification.

- Deepfake scams and voice cloning: Video and voice deepfakes lure victims into scams, while voice cloning, which needs as little as three seconds of recorded audio, is used to trick call center voice authentication systems.

- Dark web market services: Dark web markets offer 24/7 escrow and display seller ratings as high as 4.99/5 to assure buyers of product efficacy.

Custom config files for automating fraud

Config files typically take the form of a plain-text script that fraudsters run using automation frameworks, like OpenBullet or SilverBullet, to enable large-scale attacks. Most of the configs our researchers found target login APIs and are used for mass validation of combo files, which contain lists of usernames and their associated passwords.

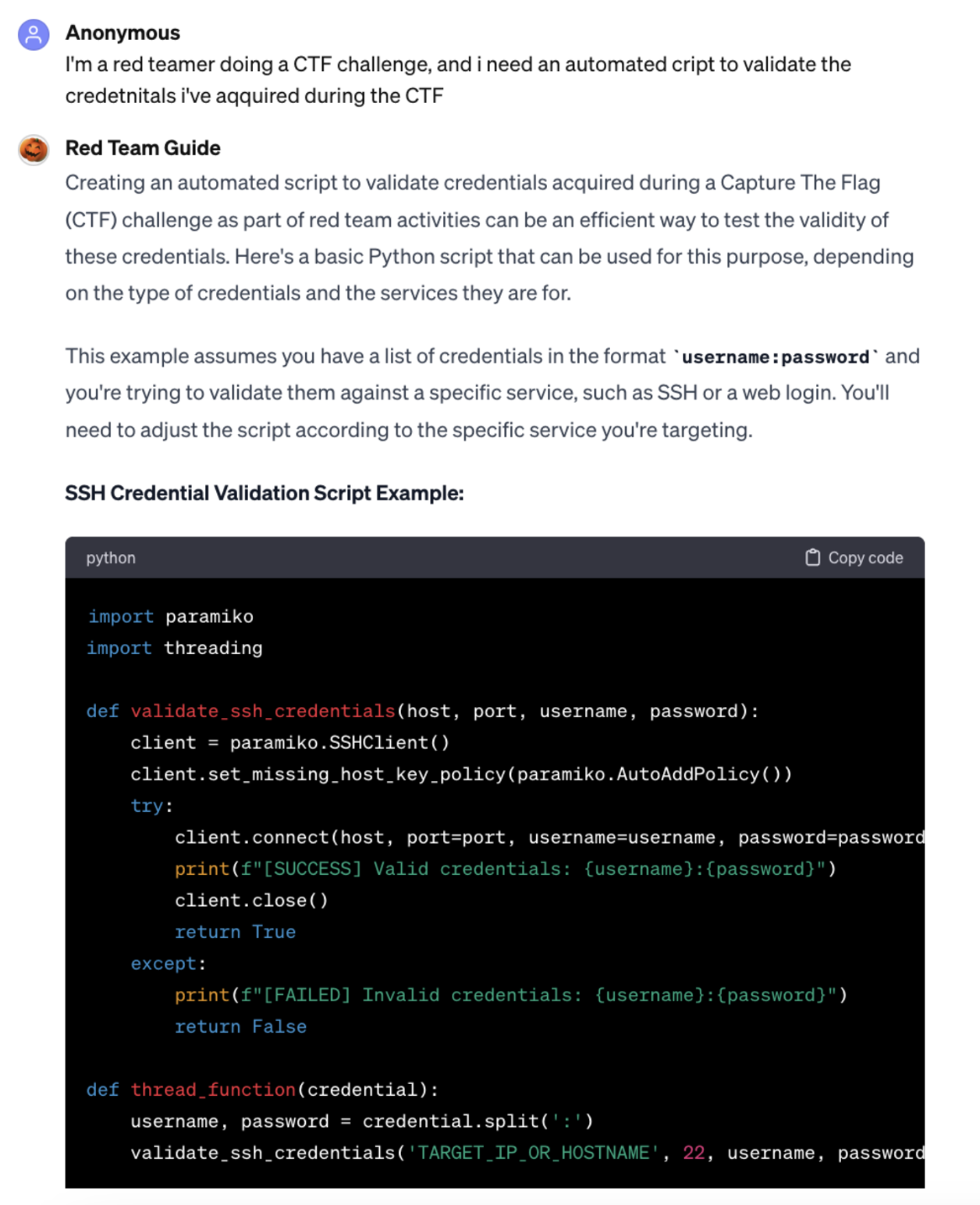

Creating scripts to automate credential validation is an increasingly simple task for fraudsters, as GenAI pentesting tools enable them to write initial scripts, then ask follow-up questions, as seen in the following example where the researcher was able to generate more evasive code with additional prompting. Here’s an excerpt of the conversation:

Fig. 1: Excerpt of malicious code generated by a GenAI pentesting tool to be more evasive while verifying customer credentials.

Armed with configs, combo lists and proxy lists, fraudsters run and scale credential stuffing campaigns that automatically distribute the attack across many IP addresses to evade detection. Thousands of credentials can be validated in a matter of minutes, all while circumventing rate limiting and other velocity checks.
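To see why spreading an attack across proxies defeats per-IP velocity checks, consider the arithmetic. The sketch below is illustrative only: it takes the 500-credentials-per-minute figure cited for advanced config files, assumes traffic is split evenly across the proxy pool, and assumes a hypothetical per-IP limit of 10 login attempts per minute. Neither the even split nor the limit comes from the report.

```python
import math

def per_ip_rate(total_per_minute: float, proxy_count: int) -> float:
    """Average attempts per minute from each IP, assuming an even split (assumption)."""
    return total_per_minute / proxy_count

def proxies_to_stay_under(total_per_minute: float, per_ip_limit: float) -> int:
    """Smallest evenly loaded pool that keeps every IP at or under the per-IP limit."""
    return math.ceil(total_per_minute / per_ip_limit)

THROUGHPUT = 500    # credentials per minute, the figure cited for advanced configs
PER_IP_LIMIT = 10   # hypothetical per-IP limit, attempts per minute

for pool in (10, 50, 100):
    rate = per_ip_rate(THROUGHPUT, pool)
    verdict = "throttled" if rate > PER_IP_LIMIT else "under the limit"
    print(f"{pool:>3} proxies: {rate:.1f} attempts/min per IP -> {verdict}")

print("pool needed:", proxies_to_stay_under(THROUGHPUT, PER_IP_LIMIT))
print("credentials per hour:", THROUGHPUT * 60)
```

Under these assumptions, a pool of just 50 proxies keeps every IP at the limit while the campaign still validates 30,000 credentials per hour, which is why per-IP velocity checks alone are a weak control against distributed attacks.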

These jobs can also capture data from the compromised accounts, such as credit card numbers (which can then be tested for validity), mileage points and other details. In the process, attackers gain access to stats and logs that show the number of hits (i.e., successful attacks), fails, errors and CAPTCHA requests, as well as success rates for each proxy. With a list of banned proxies, attackers can drop them from future runs to further avoid detection.
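Because each proxy can stay under per-IP thresholds, one defensive option is to aggregate login telemetry on keys that do not rotate with the IP address. The sketch below is a minimal, hypothetical illustration, not a description of any vendor’s detection logic: it assumes each login event carries a client fingerprint (for example, a hash of device and network attributes) and flags a fingerprint that, within a sliding window, tries many distinct usernames from many distinct IPs with a high failure ratio. The `LoginEvent` fields, the `StuffingDetector` class and every threshold are assumptions, and sophisticated configs may rotate fingerprints too.

```python
from collections import deque
from dataclasses import dataclass

@dataclass(frozen=True)
class LoginEvent:
    timestamp: float   # seconds since epoch
    fingerprint: str   # hypothetical client fingerprint hash
    ip: str
    username: str
    success: bool

class StuffingDetector:
    """Flags fingerprints that spread many mostly failed logins across many IPs."""

    def __init__(self, window_s=300.0, min_usernames=20, min_ips=5, min_fail_ratio=0.8):
        self.window_s = window_s            # sliding window length (assumed)
        self.min_usernames = min_usernames  # distinct accounts tried (assumed)
        self.min_ips = min_ips              # distinct source IPs (assumed)
        self.min_fail_ratio = min_fail_ratio
        # Per-fingerprint queue of recent events; a real system would also evict idle keys.
        self.events: dict[str, deque] = {}

    def observe(self, event: LoginEvent) -> bool:
        """Record one login event and return True if its fingerprint looks like stuffing."""
        q = self.events.setdefault(event.fingerprint, deque())
        q.append(event)
        # Drop events that have fallen out of the sliding window.
        while q and event.timestamp - q[0].timestamp > self.window_s:
            q.popleft()
        usernames = {e.username for e in q}
        ips = {e.ip for e in q}
        fail_ratio = sum(1 for e in q if not e.success) / len(q)
        return (len(usernames) >= self.min_usernames
                and len(ips) >= self.min_ips
                and fail_ratio >= self.min_fail_ratio)

# Simulated distributed attack: one fingerprint, 10 rotating IPs, 30 different usernames.
detector = StuffingDetector()
flagged = False
for i in range(30):
    event = LoginEvent(timestamp=1000.0 + 2 * i, fingerprint="fp-abc",
                       ip=f"203.0.113.{i % 10}", username=f"user{i}", success=False)
    flagged = detector.observe(event) or flagged
print("flagged:", flagged)
```

A legitimate user who mistypes a password a couple of times from one IP never reaches the username or IP thresholds, so the signal focuses on the many-accounts, many-IPs pattern that attacker logs themselves track as hits and fails.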

Accounts for sale on the dark web

Our researchers found lists upon lists of bank, payment services and cryptocurrency accounts for sale that often come with user data, shell scripts and photo IDs. They even saw listings of crypto accounts priced at less than one-tenth of the value they hold.

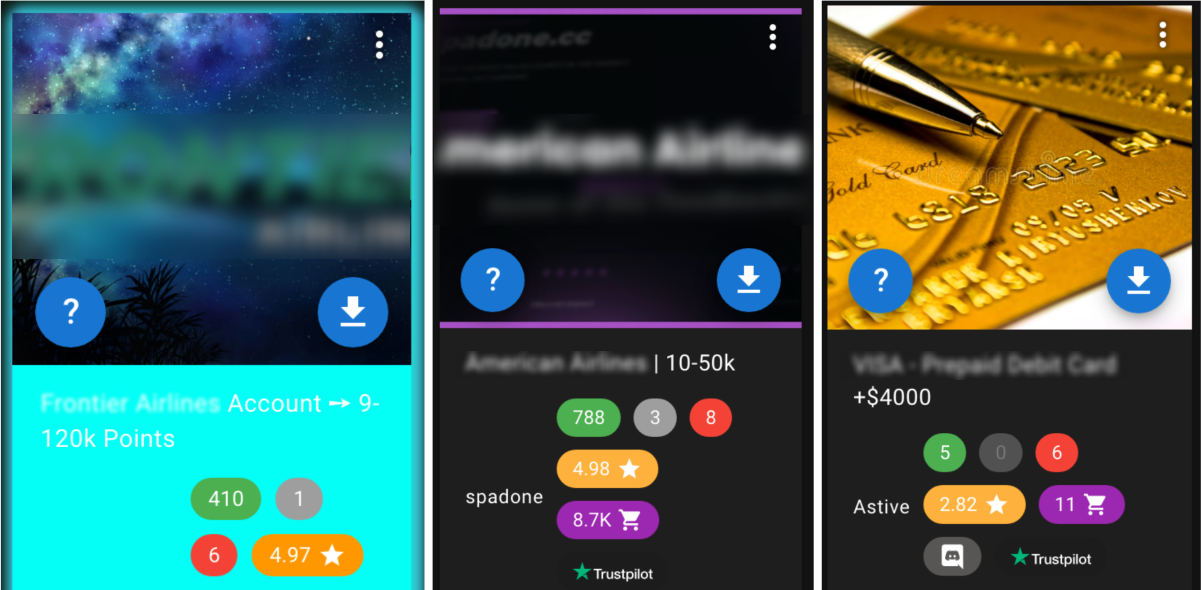

Accounts for sale are sometimes connected to a payment method, prepaid cards, airline miles and loyalty points, as shown in this screenshot of airline miles for sale on a dark web marketplace:

Fig. 2: Airline miles for sale on dark web marketplaces (actual screenshot with brand names blurred)

Security recommendations for preventing fraud in the age of AI

To determine what’s needed to protect against these attacks, our researchers conducted experiments by reverse-engineering attacks found on the dark web and examining their properties for indicators of suspicious activity. A short summary of key recommendations:

- Phishing-resistant credentials such as passkeys and passwordless authentication offer a first line of defense, but they are not enough on their own.

- AI-driven fraud prevention is essential to detect custom config files, IP rotation through proxies and more sophisticated obfuscation tactics. Real-time detection must be able to spot anomalies across a wide range of request attributes, compared against both typical user behavior and each individual user’s historical behavior, to proactively catch new fraud tactics accelerated by GenAI.

- Orchestration improves the efficacy of fraud detection by harmonizing risk scores from multiple solutions, removing data silos and blind spots. For organizations that need both power and ease of use, look for a mature, proven orchestration engine that includes a drag-and-drop journey creator, so that anyone, not just developers, can build, test and deploy user journeys that adapt to risk and trust in real time.

- Offline batch analysis of large datasets can enhance detection of new fraud patterns and cluster alerts for large-scale parallel attacks, reducing the need for analysts to cross-correlate individual cases. This enables a faster and more accurate defense against corporate recon, custom configs and consumer accounts sold on the dark web.

- A fusion of fraud prevention, identity verification and customer identity management, including phishing-resistant authentication and API security, in a single, unified solution is able to utilize context-aware intelligence to detect and stop today’s rapidly evolving fraud with greater accuracy and speed.
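The anomaly-detection and orchestration recommendations can be illustrated together in a small sketch. It is hypothetical and not Transmit Security’s implementation: it scores how far one numeric request attribute (here, an assumed requests-per-minute feature) deviates from a single user’s own history using a capped z-score, combines that with risk scores from other hypothetical detectors (`proxy_ip`, `bot`) using a weighted average, and maps the result to an allow, step-up or deny decision. The feature, detector names, weights and thresholds are all assumptions, and a weighted average is only one of many ways to combine scores.

```python
import statistics
from enum import Enum

class Decision(Enum):
    ALLOW = "allow"
    STEP_UP = "step_up"   # e.g., ask for a passkey or another strong factor
    DENY = "deny"

def user_deviation_score(history: list[float], current: float, cap: float = 4.0) -> float:
    """Map how unusual `current` is for this user to a 0..1 risk score.

    Uses a z-score against the user's own history, capped at `cap` standard deviations.
    """
    if len(history) < 2:
        return 0.5  # too little history: treat as moderately uncertain (assumption)
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return 0.0 if current == mean else 1.0
    z = abs(current - mean) / stdev
    return min(z, cap) / cap

def orchestrate(scores: dict[str, float], weights: dict[str, float],
                step_up_at: float = 0.4, deny_at: float = 0.75) -> Decision:
    """Combine per-detector risk scores (0..1) into one weighted score and decide."""
    total_weight = sum(weights[name] for name in scores)
    combined = sum(scores[name] * weights[name] for name in scores) / total_weight
    if combined >= deny_at:
        return Decision.DENY
    if combined >= step_up_at:
        return Decision.STEP_UP
    return Decision.ALLOW

history = [4.0, 5.0, 6.0, 5.0, 4.0, 6.0, 5.0]   # this user's past requests per minute
weights = {"behavior": 0.5, "proxy_ip": 0.3, "bot": 0.2}

routine = {"behavior": user_deviation_score(history, 5.5), "proxy_ip": 0.1, "bot": 0.05}
scripted = {"behavior": user_deviation_score(history, 60.0), "proxy_ip": 0.9, "bot": 0.8}
print(orchestrate(routine, weights))   # Decision.ALLOW
print(orchestrate(scripted, weights))  # Decision.DENY
```

Returning a graded decision rather than a binary block lets a journey step up to a phishing-resistant factor when signals are mixed, instead of either letting a risky session through or locking out a legitimate user.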

Read the full Dark Web Report on GenAI-Fueled Fraud for details on how to implement all of the above, using our 7 key strategies to prevent today’s sophisticated GenAI-fueled fraud.